Introduction

The geometric distribution models either the number of failures before the first success or the number of trials (attempts) needed to get the first success, in a sequence of mutually independent Bernoulli trials, each with probability of success $p$.

There are two different definitions of the geometric distribution: one based on the number of failures before the first success, and the other based on the number of trials (attempts) needed to get the first success. The choice of definition depends on the context.

In this tutorial we will discuss various properties of the geometric distribution along with their theoretical proofs.

Geometric Distribution

Consider a series of mutually independent Bernoulli trials with constant probability of success $p$ and probability of failure $q =1-p$.

Let the random variable $X$ denote the number of failures before the first success. Then $X$ takes the values $x=0,1,2,\ldots$.

To get $x$ failures before the first success we require $(x+1)$ Bernoulli trials with outcomes $FF\cdots (x \text{ times}) S$.

$$ \begin{eqnarray*} P(\text{$x$ failures and then success}) & = & P(FF\cdots (x \text{ times})S)\\ P(X=x) & = & q\cdot q\cdots \text{ ($x$ times) } \cdot p\\ & = & q^x p,\quad x=0,1,2\ldots\\ & & \quad 0<p<1, q=1-p. \end{eqnarray*} $$

The name geometric distribution is used because the probabilities for $x=0,1,2,\cdots$ are the successive terms of a geometric progression with first term $p$ and common ratio $q$.
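The construction above can be checked by simulation. The following is a minimal sketch, not part of the original derivation: the value of `p`, the sample size, the seed, and the function name `failures_before_first_success` are all illustrative assumptions.

```python
import random
from collections import Counter


def failures_before_first_success(p: float, rng: random.Random) -> int:
    """Run Bernoulli(p) trials until the first success; return the failure count."""
    failures = 0
    # rng.random() is uniform on [0, 1), so a trial fails with probability q = 1 - p.
    while rng.random() >= p:
        failures += 1
    return failures


p = 0.3                     # illustrative success probability (assumed)
n_samples = 100_000         # illustrative sample size (assumed)
rng = random.Random(12345)  # fixed seed so the run is repeatable

counts = Counter(failures_before_first_success(p, rng) for _ in range(n_samples))
for x in range(5):
    empirical = counts[x] / n_samples
    theoretical = (1 - p) ** x * p
    print(f"x={x}: empirical={empirical:.4f}, q^x p={theoretical:.4f}")
```

With a sample of this size the empirical frequencies should sit close to $q^x p$, although they will not match exactly.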

Definition of geometric distribution

A discrete random variable $X$ is said to have a geometric distribution with parameter $p$ if its probability mass function is given by

$$ \begin{equation*} P(X=x) =\left\{ \begin{array}{ll} q^x p, & \hbox{$x=0,1,2,\ldots$} \\ & \hbox{$0<p<1$, $q=1-p$} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

Clearly, $P(X=x)\geq 0$ for all $x$ and

$$ \begin{eqnarray*} \sum_{x=0}^\infty pq^x &=& p\sum_{x=0}^\infty q^x \\ &=& p(1-q)^{-1} \\ &=& p\cdot p^{-1}=1. \end{eqnarray*} $$

Hence $P(X=x)$ is a legitimate probability mass function.
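As a quick numerical companion to this check, the sketch below evaluates the p.m.f. and sums its first few hundred terms. The helper name `geometric_pmf`, the value of `p`, and the cut-off of 200 terms are assumptions made for illustration.

```python
def geometric_pmf(x: int, p: float) -> float:
    """P(X = x) = q^x p for x = 0, 1, 2, ... (failures before the first success)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    if x < 0:
        return 0.0
    return (1.0 - p) ** x * p


p = 0.3  # illustrative value (assumed)
# The infinite sum is truncated; the neglected tail equals q^200, which is tiny here.
partial_sum = sum(geometric_pmf(x, p) for x in range(200))
print(partial_sum)
```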

Key features of Geometric Distribution

- A random experiment consists of repeated trials.

- Each trial of an experiment has two possible outcomes, like success and failure.

- The probability of success ($p$) is constant for each trial.

- The trials are independent of each other.

- The random variable $X$ is the number of failures before getting first success $(X = 0,1,2,\cdots)$ OR the number of trials to get first success $(X = 1,2,\cdots)$.

Distribution Function of Geometric Distribution

The distribution function of geometric distribution is $F(x)=1-q^{x+1}, x=0,1,2,\cdots$.

Proof

The distribution function of geometric random variable is given by

$$ \begin{aligned} F(x)&=P(X\leq x)\\ &=1- P(X> x)\\ &=1-\sum_{k=x+1}^\infty pq^k\\ &=1-p(q^{x+1}+q^{x+2}+q^{x+3}+\cdots)\\ &=1-pq^{x+1}(1+q^{1}+q^{2}+\cdots)\\ &=1-pq^{x+1}(1-q)^{-1}\\ &=1-pq^{x+1}p^{-1}\\ &=1-q^{x+1}, x=0,1,2,\cdots \end{aligned} $$
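The closed form can be compared with a direct cumulative sum of the p.m.f. This is a small sketch under assumed values; `geometric_cdf` and `p = 0.3` are illustrative choices.

```python
def geometric_cdf(x: int, p: float) -> float:
    """F(x) = 1 - q^(x+1) for x = 0, 1, 2, ... (failures before the first success)."""
    return 1.0 - (1.0 - p) ** (x + 1)


p = 0.3  # illustrative value (assumed)
q = 1 - p
for x in range(6):
    direct = sum(p * q**k for k in range(x + 1))  # P(X=0) + ... + P(X=x)
    print(x, direct, geometric_cdf(x, p))
```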

Another Form of Geometric Distribution

Sometimes a geometric random variable can be defined as the number of trials (attempts) till the first success, including the trial on which the success occurs. In such situation, the p.m.f. of geometric random variable $X$ is given by

$$ \begin{equation*} P(X=x) =\left\{ \begin{array}{ll} q^{x-1} p, & \hbox{$x=1,2,\ldots$} \\ & \hbox{$0<p<1$, $q=1-p$} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

The mean for this form of geometric distribution is $E(X) = \dfrac{1}{p}$ and the variance is $\mu_2 = \dfrac{q}{p^2}$. The distribution function of this form of geometric distribution is $F(x) = 1-q^x,x=1,2,\cdots$. The moment generating function for this form is $M_X(t) = pe^t(1-qe^t)^{-1}$.

- The binomial distribution counts the number of successes in a fixed number of trials $(n)$, whereas the geometric distribution counts the number of trials (attempts) required to get the first success.
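The two forms differ only by a shift of one: if $X$ counts failures and $Y$ counts trials, then $Y = X + 1$. The sketch below illustrates this; the function names and the value of `p` are assumptions for the example.

```python
def pmf_failures(x: int, p: float) -> float:
    """P(X = x): x failures before the first success, x = 0, 1, 2, ..."""
    return (1 - p) ** x * p if x >= 0 else 0.0


def pmf_trials(y: int, p: float) -> float:
    """P(Y = y): the first success occurs on trial y, y = 1, 2, 3, ..."""
    return (1 - p) ** (y - 1) * p if y >= 1 else 0.0


p = 0.25  # illustrative value (assumed)
# Y = X + 1, so P(Y = y) should equal P(X = y - 1) for every y >= 1.
shift_holds = all(
    abs(pmf_trials(y, p) - pmf_failures(y - 1, p)) < 1e-15 for y in range(1, 50)
)
# Truncated sum for E(Y); the neglected tail is negligible for this p.
mean_trials = sum(y * pmf_trials(y, p) for y in range(1, 1000))
print(shift_holds, mean_trials, 1 / p)
```

The shift moves the mean from $q/p$ to $q/p + 1 = 1/p$ and leaves the variance $q/p^2$ unchanged.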

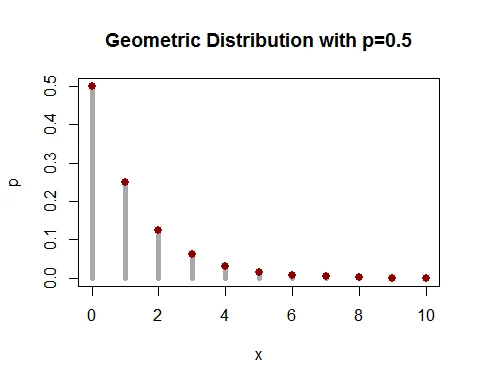

Graph of Geometric Distribution with $p=0.5$.

The graph shows the probability mass function of the geometric distribution with parameter $p=0.5$.

Mean of Geometric Distribution

The mean of Geometric distribution is $E(X)=\dfrac{q}{p}$.

Proof

The mean of geometric random variable $X$ is given by

$$ \begin{eqnarray*} \mu_1^\prime =E(X) &=& \sum_{x=0}^\infty x\cdot P(X=x) \\ &=& \sum_{x=0}^\infty x\cdot pq^x \\ &=& pq \sum_{x=1}^\infty x\cdot q^{x-1} \\ &=& pq(1-q)^{-2}\\ &=& \frac{q}{p}. \end{eqnarray*} $$
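The result $E(X)=q/p$ can be sanity-checked with a truncated version of the defining sum. This is only a numerical sketch; the helper `truncated_mean`, the cut-off of 500 terms, and the values of `p` are assumptions.

```python
def truncated_mean(p: float, n_terms: int = 500) -> float:
    """Approximate E(X) = sum of x * p * q^x by keeping the first n_terms terms."""
    q = 1 - p
    return sum(x * p * q**x for x in range(n_terms))


for p in (0.2, 0.35, 0.5):  # illustrative values (assumed)
    print(p, truncated_mean(p), (1 - p) / p)
```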

Variance of Geometric Distribution

The variance of Geometric distribution is $V(X)=\dfrac{q}{p^2}$.

Proof

The variance of geometric random variable $X$ is given by

$$ \begin{equation*} V(X) = E(X^2) - [E(X)]^2. \end{equation*} $$

Let us find the expected value of $X^2$.

$$ \begin{eqnarray*} E(X^2) & = & E[X(X-1)+X]\\ &=& E[X(X-1)] +E(X)\\ &=&\sum_{x=1}^\infty x(x-1) P(X=x) +\frac{q}{p}\\ &=& \sum_{x=2}^\infty x(x-1)pq^x +\frac{q}{p}\\ &=& pq^2 \sum_{x=2}^\infty x(x-1)q^{x-2}+\frac{q}{p}\\ &=& 2pq^2 \sum_{x=2}^\infty \frac{x(x-1)}{2\times 1}q^{x-2} +\frac{q}{p}\\ &=& 2pq^2 (1-q)^{-3}+\frac{q}{p}\\ &=& \frac{2q^2}{p^2} +\frac{q}{p}. \end{eqnarray*} $$

Now,

$$ \begin{eqnarray*} V(X) &=& E(X^2)-[E(X)]^2 \\ &=& \frac{2q^2}{p^2}+\frac{q}{p}-\frac{q^2}{p^2}\\ &=& \frac{q^2}{p^2}+\frac{q}{p}\\ &=&\frac{q}{p^2}. \end{eqnarray*} $$

For the geometric distribution the variance exceeds the mean, since $\dfrac{q}{p^2} > \dfrac{q}{p}$ whenever $0<p<1$.
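The steps of the variance proof, $V(X)=E[X(X-1)]+E(X)-[E(X)]^2$, can be mirrored numerically. The sketch below uses truncated sums; the function name, cut-off, and `p = 0.2` are illustrative assumptions.

```python
def variance_via_factorial_moment(p: float, n_terms: int = 500) -> float:
    """Variance from truncated sums, using V(X) = E[X(X-1)] + E(X) - [E(X)]^2."""
    q = 1 - p
    mean = sum(x * p * q**x for x in range(n_terms))
    second_factorial = sum(x * (x - 1) * p * q**x for x in range(n_terms))
    return second_factorial + mean - mean**2


p = 0.2  # illustrative value (assumed)
variance = variance_via_factorial_moment(p)
mean = (1 - p) / p
print(variance, (1 - p) / p**2, variance > mean)
```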

MGF of Geometric Distribution

The moment generating function of geometric distribution is $M_X(t) = p(1-qe^t)^{-1}$, which is finite for $qe^t<1$.

Proof

$$ \begin{eqnarray*} M_X(t) &=& E(e^{tX})\\ &=& \sum_{x=0}^\infty e^{tx} P(X=x) \\ &=& \sum_{x=0}^\infty e^{tx} q^x p\\ &=& p\sum_{x=0}^\infty (qe^{t})^x\\ &=& p(1-qe^t)^{-1} \qquad \bigg(\text{ $\because \sum_{x=0}^\infty q^x = (1-q)^{-1}$}\bigg). \end{eqnarray*} $$

Mean and variance from M.G.F.

The mean and variance of geometric distribution can be obtained using moment generating function as follows

$$ \begin{eqnarray*} \text{Mean }=\mu_1^\prime &=& \bigg[\frac{d}{dt} M_X(t)\bigg]_{t=0}\\ &=& \bigg[\frac{d}{dt} p(1-qe^t)^{-1}\bigg]_{t=0} \\ &=& \big[pqe^t(1-qe^t)^{-2}\big]_{t=0} \\ &=& pq(1-q)^{-2}=\frac{q}{p}. \end{eqnarray*} $$

The second raw moment of geometric distribution can be obtained as

$$ \begin{eqnarray*} \mu_2^\prime &=& \bigg[\frac{d^2}{dt^2} M_X(t)\bigg]_{t=0}\\ &=&\bigg[\frac{d}{dt} pqe^t(1-qe^t)^{-2}\bigg]_{t=0} \\ &=& pq\bigg[2e^tqe^t(1-qe^t)^{-3}+(1-qe^t)^{-2}e^t\bigg]_{t=0} \\ &=& pq\big[ 2q(1-q)^{-3}+(1-q)^{-2}\big]\\ &=& \frac{2q^2}{p^2}+\frac{q}{p}. \end{eqnarray*} $$

Therefore, the variance of geometric distribution is

$$ \begin{eqnarray*} \text{Variance }=\mu_2 &=& \mu_2^\prime-(\mu_1^\prime)^2 \\ &=& \frac{2q^2}{p^2}+\frac{q}{p} -\frac{q^2}{p^2}\\ &=& \frac{q^2}{p^2}+\frac{q}{p} =\frac{q}{p^2}. \end{eqnarray*} $$
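The derivatives of the M.G.F. at $t=0$ can also be approximated by central finite differences, which gives a rough numerical check of the mean and variance. This is a sketch, not an exact method: the step size `h`, the value of `p`, and the function name `mgf` are assumptions.

```python
import math


def mgf(t: float, p: float) -> float:
    """M_X(t) = p / (1 - q e^t); finite only while q e^t < 1."""
    q = 1 - p
    if q * math.exp(t) >= 1:
        raise ValueError("the M.G.F. is finite only for t < -ln(q)")
    return p / (1 - q * math.exp(t))


p, h = 0.4, 1e-4  # illustrative success probability and step size (assumed)
q = 1 - p

# Central differences approximate the first and second derivatives at t = 0.
first_moment = (mgf(h, p) - mgf(-h, p)) / (2 * h)
second_moment = (mgf(h, p) - 2 * mgf(0.0, p) + mgf(-h, p)) / h**2
variance = second_moment - first_moment**2

print(first_moment, q / p)
print(variance, q / p**2)
```

Finite differences carry truncation and rounding error, so the agreement is approximate rather than exact.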

Cumulant Generating Function

The cumulant generating function of geometric distribution is $K_{X}(t)=\log_e \bigg(\dfrac{p}{1-qe^t}\bigg)$.

Proof

The cumulant generating function of geometric distribution is

$$ \begin{eqnarray*} K_X(t) &=& \log_e M_X(t) \\ &=& \log_e \bigg( \frac{p}{1-qe^t}\bigg) \\ &=& -\log_e \bigg( \frac{1-qe^t}{p}\bigg)\\ &=& -\log_e \bigg(\frac{1}{p}-\frac{qe^t}{p}\bigg)\\ \end{eqnarray*} $$

$$ \begin{eqnarray*} K_X(t)&=& -\log_e\bigg[\frac{1}{p}-\frac{q}{p}\bigg(1+t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg) \bigg]\\ &=& -\log_e\bigg[\frac{1}{p}-\frac{q}{p}-\frac{q}{p}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg) \bigg]\\ &=& -\log_e\bigg[1-\frac{q}{p}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg) \bigg]\\ &=& \bigg[\frac{q}{p}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg)+\bigg(\frac{q}{p}\bigg)^2\frac{1}{2}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg)^2\\ & &+\bigg(\frac{q}{p}\bigg)^3\frac{1}{3}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg)^3\\ & & +\bigg(\frac{q}{p}\bigg)^4\frac{1}{4}\bigg(t +\frac{t^2}{2!}+ \frac{t^3}{3!}+\cdots \bigg)^4+\cdots \bigg] \end{eqnarray*} $$

The cumulants of the geometric distribution are

$$ \begin{eqnarray*} \kappa_1 &=&\mu_1^\prime \\ &=& \text{coefficient of $t$ in the expansion of $K_X(t)$}\\ &=& \frac{q}{p}. \end{eqnarray*} $$

$$ \begin{eqnarray*} \kappa_2 &= &\mu_2 \\ &=& \text{coefficient of $\frac{t^2}{2!}$ in the expansion of $K_X(t)$} \\ &=& \frac{q}{p}+ \frac{q^2}{p^2} \\ &=& \frac{q}{p^2}. \end{eqnarray*} $$

$$ \begin{eqnarray*} \kappa_3 &=&\mu_3\\ &=& \text{coefficient of $\frac{t^3}{3!}$ in the expansion of $K_X(t)$} \\ &=& \frac{q}{p}+\frac{3q^2}{p^2}+\frac{2q^3}{p^3} \\ &=& \frac{q}{p}+\frac{q^2}{p^2} +\frac{2q^2}{p^2} +\frac{2q^3}{p^3}\\ &=& \frac{q}{p^2} +\frac{2q^2}{p^3}(p+q)\\ &=& \frac{q}{p^3}(p+2q)= \frac{q}{p^3}(1+q). \end{eqnarray*} $$

$$ \begin{eqnarray*} \kappa_4 &=& \mu_4-3\kappa_2^2\\ &=& \text{coefficient of $\frac{t^4}{4!}$ in the expansion of $K_X(t)$} \\ &=& \frac{q}{p}+(\frac{3q^2}{p^2}+\frac{4q^2}{p^2}) + (\frac{6q^3}{p^3}+\frac{6q^3}{p^3}) + \frac{6q^4}{p^4} \\ &=& \frac{q}{p}+\frac{q^2}{p^2}+\frac{6q^2}{p^2} + \frac{6q^3}{p^3}+\frac{6q^3}{p^3} + \frac{6q^4}{p^4} \\ &=& \frac{q}{p^2}(p+q)+\frac{6q^2}{p^3}(p+q) + \frac{6q^3}{p^4}(p+q)\\ &=& \frac{q}{p^2}+\frac{6q^2}{p^3} + \frac{6q^3}{p^4}\\ &=& \frac{q}{p^2}+\frac{6q^2}{p^4}(p+q)\\ &=& \frac{q}{p^4}(p^2+6q)\\ &=& \frac{q}{p^4}(1+4q+q^2). \end{eqnarray*} $$

Hence

$$ \begin{eqnarray*} \mu_4 & = & \kappa_4 + 3\kappa_2^2\\ &=&\frac{q}{p^4}(1+4q+q^2)+ \frac{3q^2}{p^4}\\ &=& \frac{q}{p^4}( 1 + 7q +q^2). \end{eqnarray*} $$
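The closed forms for $\kappa_3$, $\kappa_4$ and $\mu_4$ can be compared with central moments computed directly from the p.m.f. The sketch below uses truncated sums; `central_moment`, the cut-off of 600 terms, and `p = 0.4` are illustrative assumptions.

```python
def central_moment(k: int, p: float, n_terms: int = 600) -> float:
    """Approximate E[(X - q/p)^k] with a truncated sum over the p.m.f. p * q^x."""
    q = 1 - p
    mean = q / p
    return sum((x - mean) ** k * p * q**x for x in range(n_terms))


p = 0.4  # illustrative value (assumed)
q = 1 - p

mu2 = central_moment(2, p)
mu3 = central_moment(3, p)
mu4 = central_moment(4, p)

kappa3 = mu3                # the third cumulant equals the third central moment
kappa4 = mu4 - 3 * mu2**2   # fourth cumulant from the central moments

print(kappa3, q * (1 + q) / p**3)
print(kappa4, q * (1 + 4 * q + q**2) / p**4)
print(mu4, q * (1 + 7 * q + q**2) / p**4)
```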

Cumulants can also be determined using the following method:

$$ \begin{eqnarray*} \kappa_1 = \mu_1^\prime &=& \bigg[\frac{d}{dt} K_X(t)\bigg]_{t=0} \\ & = & \bigg[\frac{d}{dt} (\log_e p -\log_e(1-qe^t))\bigg]_{t=0} \\ &=& \bigg[-\frac{1}{(1-qe^t)}(-qe^t)\bigg]_{t=0}\\ &=& \frac{q}{1-q}\\ &=&\frac{q}{p}. \end{eqnarray*} $$

$$ \begin{eqnarray*} \kappa_2= \mu_2 &=& \bigg[\frac{d^2}{dt^2} K_X(t)\bigg]_{t=0}\\ & = & \bigg[\frac{d}{dt} \frac{qe^t}{(1-qe^t)}\bigg]_{t=0} \\ &=& \bigg[\frac{1}{(1-qe^t)^2}\big[ (1-qe^t) qe^t - qe^t(-qe^t)\big]\bigg]_{t=0} \\ &=& \frac{1}{(1-q)^2}\big[ (1-q)\cdot q+ q^2\big]\\ &=& \frac{q}{(1-q)^2}\\ &=&\frac{q}{p^2}. \end{eqnarray*} $$

Characteristic function of Geometric Distribution

The characteristic function of geometric distribution is $\phi_X(t)=p(1-qe^{it})^{-1}$.

Proof

The characteristic function of geometric distribution is

$$ \begin{eqnarray*} \phi_X(t) &=& E(e^{itX})\\ &=& \sum_{x=0}^\infty e^{itx} P(X=x) \\ &=& \sum_{x=0}^\infty e^{itx} q^x p \\ &=& p\sum_{x=0}^\infty (qe^{it})^x\\ &=& p(1-qe^{it})^{-1} \qquad \bigg(\text{ $\because \sum_{x=0}^\infty q^x = (1-q)^{-1}$}\bigg). \end{eqnarray*} $$

Recurrence Relation for probabilities

The recurrence relation to calculate probabilities of geometric distribution is

$$ \begin{equation*} P(X=x+1) = q\cdot P(X=x). \end{equation*} $$

Proof

We have, $P(X=x+1) = pq^{x+1}$ and $P(X=x) = pq^x$. Hence,

$$ \begin{equation*} \frac{P(X=x+1)}{P(X=x)} = \frac{pq^{x+1}}{pq^x} = q \end{equation*} $$

$$ \begin{equation*} \therefore P(X=x+1) = q\cdot P(X=x),\; x=0,1,2,\cdots. \end{equation*} $$

which is the recurrence relation for the probabilities of the geometric distribution.
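One practical use of the recurrence is to build a table of probabilities with a single multiplication per entry, starting from $P(X=0)=p$. The sketch below assumes `n >= 1`; the function name and the value of `p` are illustrative.

```python
def probs_by_recurrence(p: float, n: int) -> list[float]:
    """Return [P(X=0), ..., P(X=n-1)] using P(X=x+1) = q * P(X=x); assumes n >= 1."""
    q = 1 - p
    probs = [p]  # P(X = 0) = p starts the recurrence
    for _ in range(n - 1):
        probs.append(q * probs[-1])
    return probs


p = 0.3  # illustrative value (assumed)
table = probs_by_recurrence(p, 6)
for x, prob in enumerate(table):
    print(x, prob, p * (1 - p) ** x)  # recurrence value next to the closed form
```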

Probability generating function of Geometric Distribution

The probability generating function of geometric distribution is $P_X(t)=p(1-qt)^{-1}$.

Proof

The probability generating function is

$$ \begin{eqnarray*} P_X(t) &=& E(t^{X})\\ &=& \sum_{x=0}^\infty t^{x} P(X=x) \\ &=& \sum_{x=0}^\infty t^{x} q^x p\\ &=& p\sum_{x=0}^\infty (qt)^x\\ &=& p(1-qt)^{-1} \qquad \bigg(\text{ $\because \sum_{x=0}^\infty q^x = (1-q)^{-1}$}\bigg). \end{eqnarray*} $$

Now,

$$ \begin{eqnarray*} P_X(t) &=& p(1-qt)^{-1} \\ &=& p\sum_{x=0}^\infty q^xt^x \\ &=& (p+pqt+pq^2t^2+\cdots+pq^xt^x+\cdots) \end{eqnarray*} $$

Hence, the probability mass function of $X$ is

$$ \begin{eqnarray*} P(X=x) & = & \text{coefficient of $t^x$ in the expansion of $P_X(t)$}\\ & = & pq^x, \; x=0,1,2,\cdots,\; 0<p,q<1,\; p+q=1. \end{eqnarray*} $$

Lack of memory property of geometric distribution

Geometric distribution is the only discrete distribution that possesses the lack of memory property.

Suppose a system can fail only at discrete points of time $0,1,2,\cdots$. Let the lifetime of the system be denoted by $X$ with the support {$0,1,2,\cdots $}.

Then the conditional probability that a system of age $m$ will survive at least $n$ additional units of time equals the unconditional probability that it will survive at least $n$ units of time. That is,

$$ P(X\geq m+n | X\geq m) = P(X\geq n) $$

Proof

Let $X\sim Geo(p)$. The p.m.f. of $X$ is

$$ \begin{equation*} P(X=x) = pq^x, x=0,1,2,\cdots; 0< p< 1, q=1-p. \end{equation*} $$

$$ \begin{eqnarray*} P(X\geq m+n) &=& pq^{m+n} + p q^{m+n+1} + pq^{m+n+2} +\cdots \\ &=& pq^{m+n}(1 + q + q^2 + \cdots)\\ & = & pq^{m+n} (1-q)^{-1}\\ & = & pq^{m+n} p^{-1} \\ &=& q^{m+n}. \end{eqnarray*} $$

$$ \begin{eqnarray*} P(X\geq m) &=& pq^{m} + p q^{m+1} + pq^{m+2} +\cdots \\ &=& pq^{m}(1 + q + q^2 + \cdots)\\ & = & pq^{m} (1-q)^{-1}\\ & = & pq^{m} p^{-1} \\ &=& q^{m}. \end{eqnarray*} $$

$$ \begin{eqnarray*} P(X\geq m+n |X\geq m) &=& \dfrac{P(X\geq m + n \cap X\geq m)}{P(X\geq m)}\\ &=& \dfrac{P(X\geq m+n)}{P(X \geq m)} \\ &=& \dfrac{q^{m+n}}{q^m}\\ &=& q^n = P(X\geq n). \end{eqnarray*} $$
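The property can be illustrated numerically by computing the tail probabilities from the p.m.f. rather than from the closed form $q^k$. This is a sketch with assumed values: `tail_probability`, the cut-off of 400 terms, `p = 0.3`, and the chosen $(m, n)$ pairs are all illustrative.

```python
def tail_probability(k: int, p: float, n_terms: int = 400) -> float:
    """Approximate P(X >= k) by summing p * q^x over x = k, ..., k + n_terms - 1."""
    q = 1 - p
    return sum(p * q**x for x in range(k, k + n_terms))


p = 0.3  # illustrative value (assumed)
pairs = [(0, 2), (3, 4), (10, 1)]  # illustrative (m, n) pairs (assumed)
for m, n in pairs:
    conditional = tail_probability(m + n, p) / tail_probability(m, p)
    print(m, n, conditional, tail_probability(n, p))
```

For each pair the conditional probability should agree with $P(X\geq n)=q^n$ up to rounding, whatever the age $m$.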

Result

Let two independent random variables $X_1$ and $X_2$ have the same geometric distribution. Then the conditional distribution of $X_1$ given $X_1+X_2=n$ is uniform on $\{0,1,\ldots,n\}$.

Proof

Given that $X_1$ and $X_2$ are independent random variables with the same geometric distribution, their common p.m.f. is

$$ \begin{equation*} P(X=x) =\left\{ \begin{array}{ll} q^x p, & \hbox{$x=0,1,2,\ldots$} \\ & \hbox{$0<p,q<1$, $p+q=1$} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

Now,

$$ \begin{eqnarray*} P(X_1 + X_2 = n) &=& \sum_{x=0}^n P(X_1 = x) \cdot P(X_2=n-x)\\ & & \qquad (\because \text{ $X_1$ and $X_2$ are independent)} \\ &=& \sum_{x=0}^n q^x p q^{n-x} p = p^2q^n (n+1). \end{eqnarray*} $$

The conditional distribution of $X_1$ given $X_1+X_2=n$ is

$$ \begin{eqnarray*} P(X_1 = x| X_1+X_2= n) &=& \frac{P(X_1 = x, X_1+X_2 =n)}{ P(X_1 + X_2 = n) } \\ &=& \frac{P(X_1 = x, X_2=n-x)}{ P(X_1 + X_2 = n) } \\ &=& \frac{P(X_1 = x)\cdot P(X_2=n-x)}{P(X_1 + X_2 = n)}\\ &=&\frac{q^xp \cdot q^{n-x}p}{p^2q^n (n+1)}\\ &=& \frac{1}{n+1}, \; x=0,1,2, \cdots, n. \end{eqnarray*} $$

which is the p.m.f. of the discrete uniform distribution on $\{0,1,\ldots,n\}$.
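Because every quantity in this result is a rational function of $p$, it can be verified with exact arithmetic. The sketch below uses Python's `fractions.Fraction`; the helper name `conditional_pmf` and the values $n=4$, $p=1/3$ are illustrative assumptions.

```python
from fractions import Fraction


def conditional_pmf(n: int, p: Fraction) -> list[Fraction]:
    """P(X1 = x | X1 + X2 = n) for x = 0..n, with X1, X2 i.i.d. geometric(p)."""
    q = 1 - p
    joint = [(q**x * p) * (q ** (n - x) * p) for x in range(n + 1)]
    total = sum(joint)  # P(X1 + X2 = n) = p^2 q^n (n + 1)
    return [j / total for j in joint]


n, p = 4, Fraction(1, 3)  # illustrative values (assumed)
result = conditional_pmf(n, p)
print(result)
```

Every entry of `result` should equal `Fraction(1, n + 1)`, independent of the value of $p$.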

Hope this tutorial helps you understand the theory of the geometric distribution.