Exponential distribution

Exponential Distribution Definition

A continuous random variable $X$ is said to have an exponential distribution with parameter $\theta$ if its p.d.f. is given by

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} \theta e^{-\theta x}, & \hbox{$x\geq 0;\theta>0$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

In notation, it can be written as $X\sim \exp(\theta)$.
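As a quick sanity check on the definition, the sketch below codes the p.d.f. in plain Python and confirms numerically that it integrates to 1. The helper names `exp_pdf` and `integrate`, the midpoint rule, the truncation of the upper limit at 100, and the value $\theta=0.4$ (chosen to match the graph) are illustrative assumptions, not part of the original text.

```python
import math

def exp_pdf(x: float, theta: float) -> float:
    """p.d.f. of exp(theta): theta * e^(-theta x) for x >= 0, and 0 otherwise."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    return theta * math.exp(-theta * x) if x >= 0 else 0.0

def integrate(f, a: float, b: float, n: int = 100_000) -> float:
    """Composite midpoint rule for the integral of f over [a, b] with n subintervals."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))

theta = 0.4
# The upper limit is truncated at 100; the neglected tail mass is e^(-40), about 4e-18.
total = integrate(lambda x: exp_pdf(x, theta), 0.0, 100.0)
print(round(total, 6))  # 1.0
```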

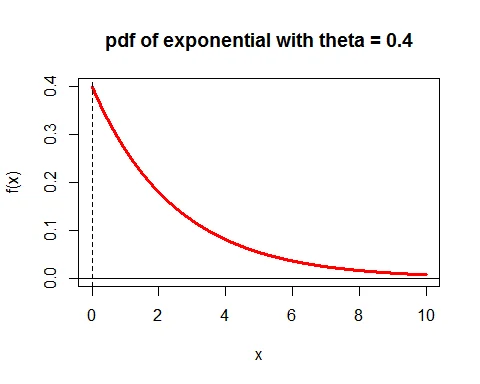

Graph of pdf of exponential distribution

Following is the graph of probability density function of exponential distribution with parameter $\theta=0.4$.

Second form of exponential distribution

Another common form of the exponential distribution uses the mean $\theta$ (a scale parameter) instead of the rate:

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} \frac{1}{\theta} e^{-\frac{x}{\theta}}, & \hbox{$x\geq 0;\theta>0$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

In notation, it can be written as $X\sim \exp(1/\theta)$.
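The two forms describe the same family with the parameter inverted: the second form with parameter $\theta$ is the first form with rate $1/\theta$, and its mean is $\theta$. The sketch below checks this pointwise. It also draws samples with the `expovariate` method of Python's standard `random` module, whose argument is the rate (1 divided by the desired mean). The function names `pdf_rate` and `pdf_mean`, the value 2.5, and the seed are hypothetical choices for illustration.

```python
import math
import random

def pdf_rate(x: float, rate: float) -> float:
    """First form: rate * e^(-rate x) for x >= 0."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

def pdf_mean(x: float, mean: float) -> float:
    """Second form: (1/mean) * e^(-x/mean) for x >= 0."""
    return math.exp(-x / mean) / mean if x >= 0 else 0.0

mean = 2.5  # the parameter theta of the second form
for x in (0.0, 0.7, 3.0, 10.0):
    # Second form with parameter `mean` equals the first form with rate 1/mean.
    assert math.isclose(pdf_mean(x, mean), pdf_rate(x, 1 / mean))

# expovariate takes the rate (1 divided by the desired mean).
rng = random.Random(1)  # fixed seed for reproducibility
draws = [rng.expovariate(1 / mean) for _ in range(200_000)]
sample_mean = sum(draws) / len(draws)
print(f"sample mean {sample_mean:.3f}")  # should be close to 2.5
```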

Standard Exponential Distribution

The case where $\theta =1$ is called the standard exponential distribution. The pdf of the standard exponential distribution is

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} e^{-x}, & \hbox{$x\geq 0$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

Distribution Function of Exponential Distribution

The distribution function of an exponential random variable is

$$ \begin{equation*} F(x)=\left\{ \begin{array}{ll} 1- e^{-\theta x}, & \hbox{$x\geq 0;\theta>0$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

Proof

For $x\geq 0$, the distribution function of an exponential random variable is

$$ \begin{eqnarray*} F(x) &=& P(X\leq x) \\ &=& \int_0^x f(u)\;du\\ &=& \theta \int_0^x e^{-\theta u}\;du\\ &=& \theta \bigg[-\frac{e^{-\theta u}}{\theta}\bigg]_0^x \\ &=& -e^{-\theta x}+1\\ &=& 1-e^{-\theta x}. \end{eqnarray*} $$

For $x<0$, $f(x)=0$, so $F(x)=0$.
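The closed form can be cross-checked against a direct numerical integral of the p.d.f. Because $F$ has an explicit inverse, $F^{-1}(p) = -\ln(1-p)/\theta$ for $0\leq p<1$, it also gives a simple way to simulate exponential values (inverse-transform sampling). This is a sketch assuming $\theta=0.4$, $x=3$, a midpoint rule with 10,000 steps, an arbitrary seed, and hypothetical helper names `exp_cdf` and `exp_quantile`; it uses only the Python standard library.

```python
import math
import random

def exp_cdf(x: float, theta: float) -> float:
    """F(x) = 1 - e^(-theta x) for x >= 0, and 0 otherwise."""
    return 1.0 - math.exp(-theta * x) if x >= 0 else 0.0

def exp_quantile(p: float, theta: float) -> float:
    """F^{-1}(p) = -ln(1 - p) / theta for 0 <= p < 1."""
    return -math.log1p(-p) / theta

theta, x = 0.4, 3.0

# Closed form vs. a midpoint-rule integral of the p.d.f. over [0, x].
steps = 10_000
h = x / steps
numeric = h * sum(theta * math.exp(-theta * (k + 0.5) * h) for k in range(steps))
print(f"{numeric:.6f} {exp_cdf(x, theta):.6f}")

# Inverse-transform sampling: if U ~ Uniform[0, 1), then F^{-1}(U) ~ exp(theta).
rng = random.Random(0)
draws = [exp_quantile(rng.random(), theta) for _ in range(100_000)]
empirical = sum(d <= x for d in draws) / len(draws)
print(f"empirical P(X <= {x}) = {empirical:.3f}")
```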

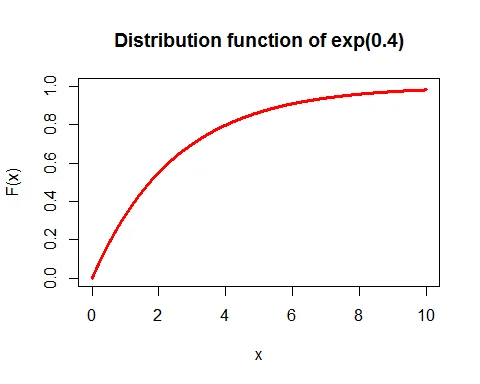

Graph of cdf of exponential distribution

Following is the graph of cumulative density function of exponential distribution with parameter $\theta=0.4$.

Mean of Exponential Distribution

The mean of an exponential random variable is $E(X) = \dfrac{1}{\theta}$.

Proof

The expected value of an exponential random variable is

$$ \begin{eqnarray*} E(X) &=& \int_0^\infty x\theta e^{-\theta x}\; dx\\ &=& \theta \int_0^\infty x^{2-1}e^{-\theta x}\; dx\\ &=& \theta \frac{\Gamma(2)}{\theta^2}\;\quad (\text{Using }\int_0^\infty x^{n-1}e^{-\theta x}\; dx = \frac{\Gamma(n)}{\theta^n} )\\ &=& \frac{1}{\theta} \end{eqnarray*} $$

Variance of Exponential Distribution

The variance of an exponential random variable is $V(X) = \dfrac{1}{\theta^2}$.

Proof

The variance of random variable $X$ is given by

$$ \begin{equation*} V(X) = E(X^2) - [E(X)]^2. \end{equation*} $$

Let us find the expected value of $X^2$.

$$ \begin{eqnarray*} E(X^2) &=& \int_0^\infty x^2\theta e^{-\theta x}\; dx\\ &=& \theta \int_0^\infty x^{3-1}e^{-\theta x}\; dx\\ &=& \theta \frac{\Gamma(3)}{\theta^3}\;\quad (\text{Using }\int_0^\infty x^{n-1}e^{-\theta x}\; dx = \frac{\Gamma(n)}{\theta^n} )\\ &=& \frac{2}{\theta^2} \end{eqnarray*} $$

Thus,

$$ \begin{eqnarray*} V(X) &=& E(X^2) -[E(X)]^2\\ &=&\frac{2}{\theta^2}-\bigg(\frac{1}{\theta}\bigg)^2\\ &=&\frac{1}{\theta^2}. \end{eqnarray*} $$
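A simulation gives an informal check of both the mean and the variance: for a large sample from $\exp(\theta)$, the sample mean should be close to $1/\theta$ and the sample variance close to $1/\theta^2$. The sketch assumes $\theta=0.4$, a sample of 200,000 draws, and an arbitrary seed; the `expovariate` method of Python's standard `random` module takes the rate, which is $\theta$ in the first form of the p.d.f.

```python
import random

theta = 0.4
rng = random.Random(42)  # fixed seed so the run is reproducible
# expovariate takes the rate, which is theta in the first form of the p.d.f.
sample = [rng.expovariate(theta) for _ in range(200_000)]

n = len(sample)
mean_hat = sum(sample) / n
var_hat = sum((x - mean_hat) ** 2 for x in sample) / (n - 1)  # unbiased sample variance

print(f"sample mean     {mean_hat:.3f}  vs 1/theta   = {1 / theta:.3f}")
print(f"sample variance {var_hat:.3f}  vs 1/theta^2 = {1 / theta ** 2:.3f}")
```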

Raw Moments of Exponential Distribution

The $r^{th}$ raw moment of an exponential random variable is

$$ \begin{equation*} \mu_r^\prime = \frac{r!}{\theta^r}. \end{equation*} $$

Proof

The $r^{th}$ raw moment of exponential random variable is

$$ \begin{eqnarray*} \mu_r^\prime &=& E(X^r) \\ &=& \int_0^\infty x^r\theta e^{-\theta x}\; dx\\ &=& \theta \int_0^\infty x^{(r+1)-1}e^{-\theta x}\; dx\\ &=& \theta \frac{\Gamma(r+1)}{\theta^{r+1}}\;\quad (\text{Using }\int_0^\infty x^{n-1}e^{-\theta x}\; dx = \frac{\Gamma(n)}{\theta^n} )\\ &=& \frac{r!}{\theta^r}\;\quad (\because \Gamma(r+1) = r!) \end{eqnarray*} $$
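The formula $\mu_r^\prime = r!/\theta^r$ can be compared with a direct numerical evaluation of $\int_0^\infty x^r\theta e^{-\theta x}\,dx$. In this sketch the infinite range is truncated at 150 and approximated with a midpoint rule; both choices, the function name `raw_moment_numeric`, and $\theta=0.4$ are assumptions for illustration.

```python
import math

def raw_moment_numeric(r: int, theta: float, upper: float = 150.0, n: int = 100_000) -> float:
    """Midpoint-rule approximation of E(X^r) = integral_0^inf x^r theta e^(-theta x) dx,
    with the infinite upper limit truncated at `upper`."""
    h = upper / n
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * h
        total += x ** r * theta * math.exp(-theta * x)
    return h * total

theta = 0.4
results = {}
for r in range(1, 5):
    exact = math.factorial(r) / theta ** r  # r! / theta^r
    approx = raw_moment_numeric(r, theta)
    results[r] = (exact, approx)
    print(f"r = {r}: r!/theta^r = {exact:.4f}, numerical = {approx:.4f}")
```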

Moment generating function of Exponential Distribution

The moment generating function of an exponential random variable is

$$ \begin{equation*} M_X(t) = \bigg(1- \frac{t}{\theta}\bigg)^{-1}, \text{ (if $t<\theta$}) \end{equation*} $$

Proof

The moment generating function of an exponential random variable is

$$ \begin{eqnarray*} M_X(t) &=& E(e^{tX}) \\ &=& \int_0^\infty e^{tx}\theta e^{-\theta x}\; dx\\ &=& \theta \int_0^\infty e^{-(\theta-t) x}\; dx\\ &=& \theta \bigg[-\frac{e^{-(\theta-t) x}}{\theta-t}\bigg]_0^\infty\\ &=& \frac{\theta }{\theta-t}\bigg[-e^{-\infty} +e^{0}\bigg]\\ &=& \frac{\theta }{\theta-t}\bigg[-0+1\bigg]\\ &=& \frac{\theta }{\theta-t}, \text{ (if $t<\theta$})\\ &=& \bigg(1- \frac{t}{\theta}\bigg)^{-1}. \end{eqnarray*} $$
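One practical use of the MGF is that its derivatives at $t=0$ give the raw moments: $M_X^\prime(0)=E(X)$ and $M_X^{\prime\prime}(0)=E(X^2)$. The sketch below approximates these derivatives with central finite differences and compares them with $1/\theta$ and $2/\theta^2$ from the mean and variance sections. The step size $h=10^{-4}$, $\theta=0.4$, and the function name `mgf` are illustrative assumptions.

```python
def mgf(t: float, theta: float) -> float:
    """M_X(t) = theta / (theta - t) for exp(theta); finite only when t < theta."""
    if t >= theta:
        raise ValueError("M_X(t) is finite only for t < theta")
    return theta / (theta - t)

theta, h = 0.4, 1e-4

# Central differences at t = 0: M'(0) = E(X) and M''(0) = E(X^2).
first = (mgf(h, theta) - mgf(-h, theta)) / (2 * h)
second = (mgf(h, theta) - 2 * mgf(0.0, theta) + mgf(-h, theta)) / h ** 2

print(f"M'(0)  ~ {first:.5f}   (1/theta   = {1 / theta:.5f})")
print(f"M''(0) ~ {second:.5f}  (2/theta^2 = {2 / theta ** 2:.5f})")
```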

Characteristic Function of Exponential Distribution

The characteristic function of an exponential random variable is

$$ \begin{equation*} \phi_X(t) = \bigg(1- \frac{it}{\theta}\bigg)^{-1}, \quad t\in\mathbb{R}. \end{equation*} $$

Proof

The characteristic function of an exponential random variable is

$$ \begin{eqnarray*} \phi_X(t) &=& E(e^{itX}) \\ &=& \int_0^\infty e^{itx}\theta e^{-\theta x}\; dx\\ &=& \theta \int_0^\infty e^{-(\theta -it) x}\; dx\\ &=& \theta \bigg[-\frac{e^{-(\theta-it) x}}{\theta-it}\bigg]_0^\infty\\ & & \quad (\text{converges for every real } t, \text{ since } |e^{-(\theta-it)x}| = e^{-\theta x}\to 0 \text{ as } x\to\infty)\\ &=& \frac{\theta }{\theta-it}\bigg[-0+1\bigg]\\ &=& \frac{\theta }{\theta-it}\\ &=& \bigg(1- \frac{it}{\theta}\bigg)^{-1}. \end{eqnarray*} $$
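Because $|e^{itx}|=1$, the defining integral of the characteristic function converges for every real $t$, including values larger than $\theta$. The sketch below, using Python complex numbers, compares a numerical integral with the closed form at $t=0.5$ and at $t=5$ with $\theta=2$. The truncation at $x=40$, the midpoint rule, and the function names `cf_closed_form` and `cf_numeric` are assumptions made for illustration.

```python
import cmath

def cf_closed_form(t: float, theta: float) -> complex:
    """phi_X(t) = (1 - i t / theta)^(-1)."""
    return 1 / (1 - 1j * t / theta)

def cf_numeric(t: float, theta: float, upper: float = 40.0, n: int = 200_000) -> complex:
    """Midpoint-rule approximation of the integral of theta * e^{-(theta - i t) x}
    over [0, upper]; the neglected tail has modulus at most e^(-theta * upper)."""
    h = upper / n
    total = 0j
    for k in range(n):
        x = (k + 0.5) * h
        total += theta * cmath.exp(-(theta - 1j * t) * x)
    return h * total

theta = 2.0
results = {}
for t in (0.5, 5.0):  # t = 5.0 exceeds theta, yet the integral still converges
    results[t] = (cf_closed_form(t, theta), cf_numeric(t, theta))
    exact, approx = results[t]
    print(f"t = {t}: closed form {exact:.6f}, numerical {approx:.6f}")
```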

Memoryless property of exponential distribution

An exponential distribution has the property that, for any $s\geq 0$ and $t\geq 0$, the conditional probability that $X > s + t$, given that $X > t$, is equal to the unconditional probability that $X > s$.

That is, if $X\sim \exp(\theta)$ and $s\geq 0, t\geq 0$, then

$$ \begin{equation*} P(X>s+t\mid X>t) = P(X>s). \end{equation*} $$

This is known as the memoryless property. The exponential distribution is the only continuous distribution with this property.

Proof

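$$ \begin{eqnarray*} P(X>s+t\mid X>t) &=& \frac{P(X>s+t,X>t)}{P(X>t)}\\ &=&\frac{P(X>s+t)}{P(X>t)}\quad (\text{since } \{X>s+t\}\subseteq\{X>t\} \text{ for } s\geq 0)\\ &=& \frac{e^{-\theta (s+t)}}{e^{-\theta t}}\quad (\text{since } P(X>x) = 1-F(x) = e^{-\theta x})\\ &=& e^{-\theta s}\\ &=& P(X>s). \end{eqnarray*} $$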
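The memoryless property can also be seen in simulated data: among draws that exceed $t$, the fraction that also exceed $s+t$ should match the overall fraction exceeding $s$, and both should be close to $e^{-\theta s}$. This is a Monte Carlo sketch; $\theta=0.4$, $s=1.5$, $t=2$, the sample size, and the seed are arbitrary choices.

```python
import math
import random

theta, s, t = 0.4, 1.5, 2.0
rng = random.Random(7)  # fixed seed for reproducibility
xs = [rng.expovariate(theta) for _ in range(400_000)]

survivors = [x for x in xs if x > t]                              # condition on X > t
conditional = sum(x > s + t for x in survivors) / len(survivors)  # P(X > s+t | X > t)
unconditional = sum(x > s for x in xs) / len(xs)                  # P(X > s)
exact = math.exp(-theta * s)                                      # e^(-theta s)

print(f"{conditional:.4f} {unconditional:.4f} {exact:.4f}")
```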

Example

Suppose the lifetime of a lightbulb has an exponential distribution with rate parameter $1/100$ per hour (so its mean lifetime is 100 hours). The lightbulb has been on for 50 hours. Find the probability that the bulb survives at least another 100 hours.

Solution

Let $X$ denote the lifetime of a lightbulb. $X\sim \exp(1/100)$.

The p.d.f. of $X$ is

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} \frac{1}{100} e^{-\frac{x}{100}}, & \hbox{$x\geq 0$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

The lightbulb has been on for 50 hours.

The probability that the bulb survives at least another 100 hours is

$$ \begin{eqnarray*} P(X>150|X>50) &=& P(X>100+50|X>50)\\ &=& P(X>100)\\ & & \quad (\text{using the memoryless property})\\ &=& 1-P(X\leq 100)\\ &=& 1-F(100)\\ &=& 1-\big(1-e^{-100/100}\big)\\ &=& e^{-1}\\ &\approx& 0.367879. \end{eqnarray*} $$
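The same number can be obtained without the memoryless shortcut, by computing the conditional probability directly as $P(X>150)/P(X>50)$ from the survival function $P(X>x)=e^{-x/100}$. The sketch below does both; the function name `survival` is hypothetical.

```python
import math

rate = 1 / 100  # per hour, so the mean lifetime is 100 hours

def survival(x: float) -> float:
    """P(X > x) = 1 - F(x) = e^(-rate * x) for x >= 0."""
    return math.exp(-rate * x)

# Direct conditional probability: P(X > 150 | X > 50) = P(X > 150) / P(X > 50).
direct = survival(150) / survival(50)
# Memoryless shortcut: P(X > 100).
shortcut = survival(100)
print(round(direct, 6), round(shortcut, 6))  # 0.367879 0.367879
```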

Sum of independent exponential variates is a gamma variate

Let $X_i$, $i=1,2,\cdots, n$, be independent and identically distributed exponential random variables with parameter $\theta$. Then $\sum_{i=1}^n X_i$ follows a gamma distribution.

Proof

The moment generating function of $X_i$ is

$$ \begin{equation*} M_{X_i}(t) = \bigg(1- \frac{t}{\theta}\bigg)^{-1}, \text{ (if $t<\theta$}) \end{equation*} $$

Let $Z = \sum_{i=1}^n X_i$.

Remember that the moment generating function of a sum of mutually independent random variables is just the product of their moment generating functions.

Then the moment generating function of $Z$ is

$$ \begin{eqnarray*} M_Z(t) &=& \prod_{i=1}^n M_{X_i}(t)\\ &=& \prod_{i=1}^n \bigg(1- \frac{t}{\theta}\bigg)^{-1}\\ &=& \bigg[\bigg(1- \frac{t}{\theta}\bigg)^{-1}\bigg]^n\\ &=& \bigg(1- \frac{t}{\theta}\bigg)^{-n}. \end{eqnarray*} $$

This is the moment generating function of the gamma distribution with rate parameter $\theta$ and shape parameter $n$. Hence, by the uniqueness theorem for moment generating functions, $Z$ follows the $G(\theta,n)$ distribution.
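For integer shape $n$, the gamma distribution with rate $\theta$ (often called the Erlang distribution) has the closed-form c.d.f. $1-\sum_{k=0}^{n-1} e^{-\theta z}(\theta z)^k/k!$ for $z\geq 0$. The sketch below simulates sums of $n$ independent $\exp(\theta)$ draws and compares their empirical c.d.f. with that formula. It assumes $G(\theta,n)$ denotes rate $\theta$ and shape $n$, which is what the MGF $(1-t/\theta)^{-n}$ corresponds to; $\theta=0.4$, $n=5$, the seed, and the helper name `erlang_cdf` are illustrative.

```python
import math
import random

def erlang_cdf(z: float, n: int, theta: float) -> float:
    """C.d.f. of the gamma distribution with integer shape n and rate theta."""
    if z < 0:
        return 0.0
    return 1.0 - sum(math.exp(-theta * z) * (theta * z) ** k / math.factorial(k)
                     for k in range(n))

theta, n = 0.4, 5
rng = random.Random(3)  # fixed seed for reproducibility
# Each simulated Z is the sum of n independent exp(theta) draws.
zs = [sum(rng.expovariate(theta) for _ in range(n)) for _ in range(100_000)]

comparison = []
for z in (5.0, 12.5, 20.0):
    empirical = sum(v <= z for v in zs) / len(zs)
    comparison.append((empirical, erlang_cdf(z, n, theta)))
    print(f"z = {z:4}: empirical {empirical:.3f}, gamma c.d.f. {erlang_cdf(z, n, theta):.3f}")
```

With $n=1$ the formula reduces to $1-e^{-\theta z}$, the exponential c.d.f., as expected.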