Hypergeometric Experiment

A hypergeometric experiment is an experiment which satisfies each of the following conditions:

- The population or set to be sampled consists of $N$ individuals, objects, or elements (a finite population).

- Each object can be characterized as a “defective” or “non-defective”, and there are $M$ defectives in the population.

- A sample of $n$ individuals is drawn in such a way that each subset of size $n$ is equally likely to be chosen.

Hypergeometric Distribution

Suppose we have a hypergeometric experiment. That is, there are $N$ units in the population and $M$ out of $N$ are defective, so $N-M$ units are non-defective.

Let $X$ denote the number of defectives in a completely random sample of size $n$ drawn from this population of $N$ units.

The total number of ways of choosing $n$ units out of $N$ is $\binom{N}{n}$.

Out of $M$ defective units, $x$ defective units can be selected in $\binom{M}{x}$ ways and out of $N-M$ non-defective units, the remaining $(n-x)$ units can be selected in $\binom{N-M}{n-x}$ ways.

Hence, the probability of selecting $x$ defective units in a random sample of $n$ units out of $N$ is

$$ \begin{equation*} P(X=x) =\frac{\text{Favourable Cases}}{\text{Total Cases}} \end{equation*} $$

$$ \begin{equation*} \therefore P(X=x)=\frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}},\;\; x=0,1,2,\cdots, n. \end{equation*} $$

The above distribution is called the hypergeometric distribution.
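The counting argument above (favourable cases over total cases) can be checked by brute force. The Python sketch below uses hypothetical helper names and a small illustrative population. It enumerates every size-$n$ subset, treats the first $M$ units as the defectives, and compares the resulting frequencies with the formula using exact fractions.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def pmf_by_enumeration(N, M, n):
    """Count, over all C(N, n) equally likely samples, how many contain x defectives."""
    counts = [0] * (n + 1)
    for sample in combinations(range(N), n):   # units 0..M-1 are the defectives
        x = sum(1 for unit in sample if unit < M)
        counts[x] += 1
    return [Fraction(c, comb(N, n)) for c in counts]


def pmf_by_formula(N, M, n):
    """Favourable cases C(M, x) * C(N-M, n-x) divided by total cases C(N, n)."""
    return [Fraction(comb(M, x) * comb(N - M, n - x), comb(N, n)) for x in range(n + 1)]


# Illustrative population: N = 10 units, M = 4 defective, samples of n = 3
print(pmf_by_enumeration(10, 4, 3) == pmf_by_formula(10, 4, 3))  # True
```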

Notation: $X\sim H(n,M,N)$.

Definition of Hypergeometric Distribution

A discrete random variable $X$ is said to have a hypergeometric distribution if its probability mass function is given by

$$ \begin{equation*} P(X=x)=\frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}},\;\; x=0,1,2,\cdots, n. \end{equation*} $$

where:

- $N$ = total population size

- $M$ = number of successes (e.g. defectives) in the population

- $n$ = number of draws (sample size)

- $x$ = number of observed successes

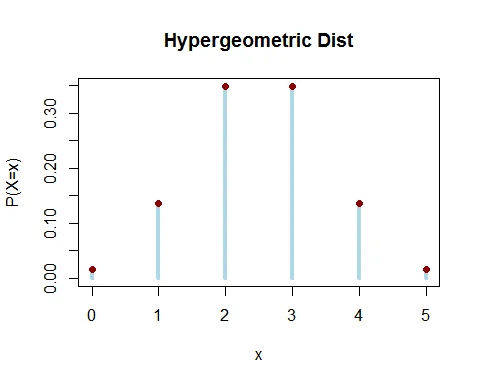

Graph of Hypergeometric Distribution

Following graph shows the probability mass function of hypergeometric distribution H(5,5,20).
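The plotted probabilities can be reproduced numerically. This is a minimal sketch using only Python's standard library. `hypergeom_pmf` is a hypothetical helper whose argument order follows the notation $H(n,M,N)$, and the `#` bars are only a rough text stand-in for the graph.

```python
from math import comb


def hypergeom_pmf(x, n, M, N):
    """P(X = x) for X ~ H(n, M, N): n draws, M successes, population size N."""
    if x < max(0, n - (N - M)) or x > min(n, M):
        return 0.0                       # x lies outside the support
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)


n, M, N = 5, 5, 20                       # the distribution H(5, 5, 20) shown in the graph
for x in range(n + 1):
    p = hypergeom_pmf(x, n, M, N)
    print(f"x={x}  P={p:.4f}  {'#' * round(100 * p)}")
```

The most likely value is $x=1$, with probability $6825/15504 \approx 0.44$.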

Key Features of Hypergeometric Distribution

- There are $N$ units in the population. These $N$ units are classified as $M$ successes and the remaining $N-M$ failures.

- Out of $N$ units, $n$ units are selected at random without replacement.

- $X$ is the number of successes in the sample.
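Because the units are drawn at random without replacement, the distribution can also be approximated by simulation. This sketch uses `random.sample`, which draws without replacement, on a population of 7 successes and 13 failures (the setting of Example 1). `simulate_hypergeometric` is an illustrative name, and the trial count and seed are arbitrary choices.

```python
import random
from collections import Counter
from math import comb


def simulate_hypergeometric(N, M, n, trials, seed=0):
    """Estimate P(X = x) by repeatedly drawing n of N units without replacement."""
    rng = random.Random(seed)
    population = [1] * M + [0] * (N - M)          # 1 = success, 0 = failure
    counts = Counter(sum(rng.sample(population, n)) for _ in range(trials))
    return {x: counts[x] / trials for x in range(n + 1)}


N, M, n = 20, 7, 6
estimates = simulate_hypergeometric(N, M, n, trials=100_000)
for x in range(n + 1):
    exact = comb(M, x) * comb(N - M, n - x) / comb(N, n)
    print(f"x={x}: simulated {estimates[x]:.4f}  formula {exact:.4f}")
```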

Mean of Hypergeometric Distribution

The expected value of a hypergeometric random variable is $E(X) =\dfrac{Mn}{N}$.

Proof

The expected value of a hypergeometric random variable is

$$ \begin{eqnarray*} E(X) &=& \sum_{x=0}^n x\frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}}\\ &=& 0+ \sum_{x=1}^n x\frac{\frac{M!}{x!(M-x)!}\binom{N-M}{n-x}}{\frac{N!}{n!(N-n)!}}\\ &=& \sum_{x=1}^n \frac{\frac{M(M-1)!}{(x-1)!(M-x)!}\binom{N-M}{n-x}}{\frac{N(N-1)!}{n(n-1)!(N-n)!}}\\ &=& \frac{Mn}{N}\sum_{x=1}^n\frac{\binom{M-1}{x-1}\binom{N-M}{n-x}}{\binom{N-1}{n-1}} \end{eqnarray*} $$

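Let $x-1=y$. So for $x=1$, $y=0$ and for $x=n$, $y=n-1$. The summand then becomes the PMF of $H(n-1,M-1,N-1)$ evaluated at $y$ (note that $N-M=(N-1)-(M-1)$), and summing a PMF over all its values gives 1. Therefore

$$ \begin{eqnarray*} E(X) &=& \frac{Mn}{N}\sum_{y=0}^{n-1}\frac{\binom{M-1}{y}\binom{N-M}{n-y-1}}{\binom{N-1}{n-1}} \\ &=&\frac{Mn}{N}\times 1. \end{eqnarray*} $$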

Hence, mean = $E(X) =\dfrac{Mn}{N}$.
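As a sanity check of this result (not a substitute for the proof), the mean can be computed directly from its definition with exact rational arithmetic. The helper names and parameter choices below are illustrative only.

```python
from fractions import Fraction
from math import comb


def pmf(x, n, M, N):
    # math.comb returns 0 when x > M, so impossible values contribute nothing
    return Fraction(comb(M, x) * comb(N - M, n - x), comb(N, n))


def mean_from_pmf(n, M, N):
    """E(X) = sum of x * P(X = x) over x = 0, ..., n, in exact arithmetic."""
    return sum(x * pmf(x, n, M, N) for x in range(n + 1))


for n, M, N in [(6, 7, 20), (10, 5, 20), (4, 3, 10), (5, 5, 20)]:
    assert mean_from_pmf(n, M, N) == Fraction(M * n, N)
print("E(X) = Mn/N in every tested case")
```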

Variance of Hypergeometric Distribution

The variance of a hypergeometric random variable is $V(X) = \dfrac{Mn(N-M)(N-n)}{N^2(N-1)}$.

Proof

The variance of random variable $X$ is given by

$$ \begin{equation*} V(X) = E(X^2) - [E(X)]^2. \end{equation*} $$

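Since $E(X^2)=E[X(X-1)]+E(X)$, it is convenient to first find the expected value of $X(X-1)$. The terms with $x=0$ and $x=1$ vanish because of the factor $x(x-1)$.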

$$ \begin{eqnarray*} E[X(X-1)]&=& \sum_{x=0}^n x(x-1)\frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}}\\ &=& \sum_{x=2}^n x(x-1)\frac{\frac{M!}{x!(M-x)!}\binom{N-M}{n-x}}{\frac{N!}{n!(N-n)!}}\\ &=& \sum_{x=2}^n \frac{\frac{M(M-1)(M-2)!}{(x-2)!(M-x)!}\binom{N-M}{n-x}}{\frac{N(N-1)(N-2)!}{n(n-1)(n-2)!(N-n)!}}\\ &=& \frac{M(M-1)n(n-1)}{N(N-1)}\sum_{x=2}^n\frac{\binom{M-2}{x-2}\binom{N-M}{n-x}}{\binom{N-2}{n-2}} \end{eqnarray*} $$

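Let $x-2=y$. So for $x=2$, $y=0$ and for $x=n$, $y=n-2$. The summand then becomes the PMF of $H(n-2,M-2,N-2)$ evaluated at $y$ (note that $N-M=(N-2)-(M-2)$), so the sum equals 1. Therefore

$$ \begin{eqnarray*} E[X(X-1)]&=& \frac{M(M-1)n(n-1)}{N(N-1)}\sum_{y=0}^{n-2}\frac{\binom{M-2}{y}\binom{N-M}{n-y-2}}{\binom{N-2}{n-2}}\\ &=& \frac{M(M-1)n(n-1)}{N(N-1)}\times 1\\ & = &\frac{M(M-1)n(n-1)}{N(N-1)}. \end{eqnarray*} $$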

Hence,

$$ \begin{eqnarray*} V(X) &=& E[X(X-1)]+E(X)-[E(X)]^2 \\ &=& \frac{M(M-1)n(n-1)}{N(N-1)}+ \frac{Mn}{N}- \frac{M^2n^2}{N^2} \\ &=& \frac{Mn\big[N(M-1)(n-1)+N(N-1)-Mn(N-1)\big]}{N^2(N-1)}\\ &=& \frac{Mn(N-M)(N-n)}{N^2(N-1)}. \end{eqnarray*} $$
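The two closed forms derived above can be cross-checked against the PMF in exact arithmetic. This is a minimal sketch. The helper names are hypothetical, and the parameter triples $(n, M, N)$ are arbitrary test cases.

```python
from fractions import Fraction
from math import comb


def pmf(x, n, M, N):
    return Fraction(comb(M, x) * comb(N - M, n - x), comb(N, n))


def factorial_moment(n, M, N):
    """E[X(X-1)] computed directly from the PMF."""
    return sum(x * (x - 1) * pmf(x, n, M, N) for x in range(n + 1))


def variance_from_pmf(n, M, N):
    """V(X) = E(X^2) - [E(X)]^2 computed directly from the PMF."""
    mean = sum(x * pmf(x, n, M, N) for x in range(n + 1))
    second_moment = sum(x * x * pmf(x, n, M, N) for x in range(n + 1))
    return second_moment - mean ** 2


for n, M, N in [(6, 7, 20), (10, 5, 20), (4, 3, 10)]:
    assert factorial_moment(n, M, N) == Fraction(M * (M - 1) * n * (n - 1), N * (N - 1))
    assert variance_from_pmf(n, M, N) == Fraction(M * n * (N - M) * (N - n), N**2 * (N - 1))
print("E[X(X-1)] and V(X) match the closed forms")
```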

Binomial as Limiting Case

If $N\to \infty$ with $n$ fixed and $\frac{M}{N}\to p$, the hypergeometric distribution tends to the binomial distribution with parameters $n$ and $p$.

Proof

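Write each binomial coefficient as a falling factorial divided by a factorial, then divide the numerator and denominator by $N^n$:

$$ \begin{eqnarray*} P(X=x) &=& \frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}}\\ &=& \binom{n}{x}\frac{M(M-1)\cdots(M-x+1)\,(N-M)(N-M-1)\cdots(N-M-n+x+1)}{N(N-1)\cdots(N-n+1)}\\ &=& \binom{n}{x}\frac{\prod_{i=0}^{x-1}\left(\frac{M}{N}-\frac{i}{N}\right)\prod_{j=0}^{n-x-1}\left(1-\frac{M}{N}-\frac{j}{N}\right)}{\prod_{k=0}^{n-1}\left(1-\frac{k}{N}\right)}. \end{eqnarray*} $$

As $N \to \infty$ with $n$ and $x$ fixed and $\frac{M}{N}\to p$, every term $\frac{i}{N}$, $\frac{j}{N}$, $\frac{k}{N}$ tends to 0. The numerator therefore tends to $p^x(1-p)^{n-x}$ and the denominator to 1, which gives the binomial distribution: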

$$ P(X=x) = \binom{n}{x}p^x (1-p)^{n-x}, x=0,1,2,\cdots, n; \; 0<p<1. $$
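The convergence can be observed numerically. The sketch below holds $n=5$, $x=2$ and $p=0.3$ fixed and lets $N$ grow with $M=0.3N$. All of these values are arbitrary illustrative choices, and the helper names are hypothetical.

```python
from math import comb


def hypergeom_pmf(x, n, M, N):
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


n, x = 5, 2
p = 0.3
target = binom_pmf(x, n, p)
errors = []
for N in [10, 100, 1_000, 10_000, 100_000]:
    M = 3 * N // 10                      # keeps M/N = 0.3 exactly for these N
    h = hypergeom_pmf(x, n, M, N)
    errors.append(abs(h - target))
    print(f"N={N:>7}: hypergeometric {h:.6f}  binomial {target:.6f}")
```

The gap shrinks roughly in proportion to $1/N$ as the population grows.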

Hypergeometric Distribution Examples

Example 1: Parking Lot Cars

Of the 20 cars in the parking lot, 7 are using diesel fuel and 13 are using gasoline. We randomly choose 6 cars.

a. What is the probability that 3 are using diesel?

b. What is the probability that at least 2 are using diesel?

c. What is the probability that at most 2 are using diesel?

Solution

Here $N=20$ total cars, $M=7$ using diesel, $N-M=13$ using gasoline, and $n=6$ cars selected.

Let $X$ denote the number of cars using diesel. The probability mass function is:

$$ \begin{aligned} P(X=x)&=\frac{\binom{7}{x}\binom{13}{6-x}}{\binom{20}{6}},\; \; x=0,1,2,\cdots, 6 \end{aligned} $$

a. Exactly 3 using diesel:

$$ \begin{aligned} P(X=3) &= \frac{\binom{7}{3}\binom{13}{3}}{\binom{20}{6}}\\ &= \frac{35\times 286}{38760}\\ &= 0.2583 \end{aligned} $$

b. At least 2 using diesel:

$$ \begin{aligned} P(X\geq 2) &= 1-P(X\leq 1)\\ &=1- \sum_{x=0}^{1}P(X=x)\\ &= 1-\big(P(X=0)+P(X=1)\big)\\ &=1-\bigg(\frac{\binom{7}{0}\binom{13}{6}}{\binom{20}{6}}+\frac{\binom{7}{1}\binom{13}{5}}{\binom{20}{6}}\bigg)\\ &=1-\bigg(\frac{1\times 1716}{38760}+\frac{7\times 1287}{38760}\bigg)\\ &= 1-\big(0.0443+0.2324\big)\\ &=1-0.2767\\ &= 0.7233 \end{aligned} $$

c. At most 2 using diesel:

$$ \begin{aligned} P(X\leq 2) &= \sum_{x=0}^{2}P(X=x)\\ &= P(X=0)+P(X=1)+P(X=2)\\ &=\frac{\binom{7}{0}\binom{13}{6}}{\binom{20}{6}}+\frac{\binom{7}{1}\binom{13}{5}}{\binom{20}{6}}+\frac{\binom{7}{2}\binom{13}{4}}{\binom{20}{6}}\\ &=\frac{1\times 1716}{38760}+\frac{7\times 1287}{38760}+\frac{21\times 715}{38760}\\ &= 0.0443+0.2324+0.3874\\ &=0.6641 \end{aligned} $$
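The three answers can be reproduced in a few lines of Python. `hypergeom_pmf` is an illustrative helper, and parts b and c use the same sums as the hand calculation.

```python
from math import comb


def hypergeom_pmf(x, n, M, N):
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)


N, M, n = 20, 7, 6                                            # 20 cars, 7 diesel, 6 chosen
p_a = hypergeom_pmf(3, n, M, N)                               # exactly 3 diesel
p_b = 1 - sum(hypergeom_pmf(x, n, M, N) for x in range(2))    # at least 2 diesel
p_c = sum(hypergeom_pmf(x, n, M, N) for x in range(3))        # at most 2 diesel
print(f"a. {p_a:.4f}  b. {p_b:.4f}  c. {p_c:.4f}")            # a. 0.2583  b. 0.7233  c. 0.6641
```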

Example 2: Job Selection

Suppose that 20 people apply for a job. If 10 people are hired at random:

a. What is the probability that the 10 selected will include all 5 most qualified applicants?

b. What is the probability that 3 of the 5 most qualified applicants are among the 10 selected?

Solution

Here $N=20$ applicants, $M=5$ most qualified, $N-M=15$ others, and $n=10$ hired.

Let $X$ denote the number of most qualified applicants selected.

$$ \begin{aligned} P(X=x)&=\frac{\binom{5}{x}\binom{15}{10-x}}{\binom{20}{10}},\; \; x=0,1,2,\cdots, 5 \end{aligned} $$

a. All 5 most qualified included:

$$ \begin{aligned} P(X=5) &= \frac{\binom{5}{5}\binom{15}{5}}{\binom{20}{10}}\\ &= \frac{1\times 3003}{184756}\\ &= 0.0163 \end{aligned} $$

b. Exactly 3 most qualified included:

$$ \begin{aligned} P(X=3) &= \frac{\binom{5}{3}\binom{15}{7}}{\binom{20}{10}}\\ &= \frac{10\times 6435}{184756}\\ &= 0.3483 \end{aligned} $$
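The same two probabilities can be computed directly. This sketch uses only the standard library, and `hypergeom_pmf` is an illustrative helper rather than a library function.

```python
from math import comb


def hypergeom_pmf(x, n, M, N):
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)


N, M, n = 20, 5, 10          # 20 applicants, 5 most qualified, 10 hired
p_all_five = hypergeom_pmf(5, n, M, N)
p_three = hypergeom_pmf(3, n, M, N)
print(f"a. {p_all_five:.4f}  b. {p_three:.4f}")   # a. 0.0163  b. 0.3483
```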

Example 3: Missile Firing

From a lot of 10 missiles, 4 are selected at random and fired. If the lot contains 3 defective missiles that will not fire:

a. What is the probability that all 4 will fire?

b. What is the probability that at most 2 will not fire?

Solution

Here $N=10$ missiles, $M=3$ defective, $n=4$ selected. Let $X$ = number of defective missiles.

$$ \begin{aligned} P(X=x)&=\frac{\binom{3}{x}\binom{7}{4-x}}{\binom{10}{4}},\; \; x=0,1,2,3 \end{aligned} $$

a. All 4 will fire (0 defective):

$$ \begin{aligned} P(X=0) &= \frac{\binom{3}{0}\binom{7}{4}}{\binom{10}{4}}\\ &= \frac{1\times 35}{210}\\ &= 0.1667 \end{aligned} $$

Thus, there is a 16.67% chance that all 4 missiles will fire.

b. At most 2 defective:

$$ \begin{aligned} P(X\leq 2) &= \sum_{x=0}^{2}P(X=x)\\ &= \big(P(X=0)+P(X=1)+P(X=2)\big)\\ &=\bigg(\frac{\binom{3}{0}\binom{7}{4}}{\binom{10}{4}}+\frac{\binom{3}{1}\binom{7}{3}}{\binom{10}{4}}+\frac{\binom{3}{2}\binom{7}{2}}{\binom{10}{4}}\bigg)\\ &=\bigg(\frac{1\times 35}{210}+\frac{3\times 35}{210}+\frac{3\times 21}{210}\bigg)\\ &= \big(0.1667+0.5+0.3\big)\\ &= 0.9667 \end{aligned} $$

Thus, there is a 96.67% chance that at most 2 missiles will not fire.
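With exact fractions the two answers are $1/6$ and $29/30$. The sketch below also checks part b through its complement: at most 2 failures means anything except drawing all 3 defectives. The helper name is illustrative.

```python
from fractions import Fraction
from math import comb


def hypergeom_pmf(x, n, M, N):
    """Exact P(X = x) as a Fraction."""
    return Fraction(comb(M, x) * comb(N - M, n - x), comb(N, n))


N, M, n = 10, 3, 4           # 10 missiles, 3 defective, 4 fired
p_all_fire = hypergeom_pmf(0, n, M, N)
p_at_most_2_fail = sum(hypergeom_pmf(x, n, M, N) for x in range(3))
# Complement check: "at most 2 fail" is everything except "all 3 defectives drawn"
assert p_at_most_2_fail == 1 - hypergeom_pmf(3, n, M, N)
print(p_all_fire, p_at_most_2_fail)   # 1/6 29/30
```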

Properties Summary Table

| Property | Formula |
|---|---|
| PMF | $P(X=x)=\frac{\binom{M}{x}\binom{N-M}{n-x}}{\binom{N}{n}}$ |
| Mean | $E(X) = \frac{Mn}{N}$ |
| Variance | $V(X) = \frac{Mn(N-M)(N-n)}{N^2(N-1)}$ |
| Range | $\max(0, n-N+M) \leq x \leq \min(n, M)$ |
| Limiting Distribution | Binomial$(n,p)$ as $N \to \infty$ with $\frac{M}{N} \to p$ |
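The Range row is easiest to see in a case where the lower bound is positive. With the illustrative values $N=10$, $M=7$ and $n=6$, there are only 3 non-defectives, so at least 3 defectives must be drawn. The helper names below are hypothetical.

```python
from math import comb


def support(n, M, N):
    """Values X ~ H(n, M, N) can take: max(0, n-N+M) <= x <= min(n, M)."""
    return range(max(0, n - N + M), min(n, M) + 1)


def pmf(x, n, M, N):
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)


n, M, N = 6, 7, 10           # only N - M = 3 non-defectives, so X >= 3
print(list(support(n, M, N)))                               # [3, 4, 5, 6]
print([pmf(x, n, M, N) for x in range(0, 3)])               # zeros below the support
print(sum(pmf(x, n, M, N) for x in support(n, M, N)))       # total probability
```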

When to Use Hypergeometric Distribution

The hypergeometric distribution is appropriate when:

- Sampling without replacement from a finite population

- Population is divided into two categories (success/failure, defective/good)

- We’re interested in the number of successes in a fixed sample

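- The sample size $n$ is not negligible compared with the population size $N$ (when $n$ is a small fraction of $N$, the binomial approximation gives nearly the same probabilities)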

Common Applications:

- Quality control and inspection without replacement

- Lottery and raffle probability calculations

- Sampling from batches of manufactured items

- Selection of committee members from groups

- Epidemiological studies with finite populations

- Card probabilities in games

- Auditing and sample-based verification

Key Differences from Binomial

| Aspect | Hypergeometric | Binomial |
|---|---|---|
| Sampling | Without replacement | With replacement |
| Population | Finite and fixed | Infinite or very large |
| Trials | Dependent | Independent |
| Probability | Changes with each trial | Constant |
| Parameters | $N$, $M$, $n$ | $n$, $p$ |
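To see the effect of sampling without replacement, this sketch puts the hypergeometric PMF of Example 1 next to a binomial PMF with the same $p=M/N$. It also checks a consequence of the variance formula. Dividing $V(X)$ by the binomial variance $np(1-p)$ with $p=M/N$ leaves exactly the factor $\frac{N-n}{N-1}$, which is below 1 whenever $n>1$. The helper names are illustrative.

```python
from math import comb


def hypergeom_pmf(x, n, M, N):
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)   # without replacement


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)          # with replacement


N, M, n = 20, 7, 6           # Example 1 setting
p = M / N
for x in range(n + 1):
    print(f"x={x}: hypergeometric {hypergeom_pmf(x, n, M, N):.4f}  binomial {binom_pmf(x, n, p):.4f}")

var_hyper = M * n * (N - M) * (N - n) / (N**2 * (N - 1))
var_binom = n * p * (1 - p)
print(var_hyper / var_binom, (N - n) / (N - 1))   # the two ratios agree up to rounding
```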

Conclusion

The hypergeometric distribution is essential for probability calculations involving sampling without replacement from finite populations. In that setting it gives exact probabilities, whereas the binomial distribution is only an approximation, which makes it particularly useful in quality control and auditing applications.