Introduction

The negative binomial distribution arises from an experiment that satisfies the following three conditions:

- An experiment consists of a sequence of independent Bernoulli trials (each trial results in success or failure)

- The probability of success is constant from trial to trial: $P(S \text{ on trial } i) = p$ for $i=1,2,3,\cdots$

- The experiment continues until a total of $r$ successes have been observed, where $r$ is a specified positive integer.

Thus, the negative binomial distribution models the number of failures that precede the $r^{th}$ success. If $X$ denotes the number of failures before the $r^{th}$ success, then $X$ is called a negative binomial random variable.
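The experiment described above can be simulated directly. The following is a minimal Python sketch, not part of the original text. The helper names `simulate_failures` and `nb_pmf` and the illustrative values $r=3$, $p=0.4$ are hypothetical choices. It runs Bernoulli trials until $r$ successes occur, records the number of failures, and compares the empirical frequencies with the p.m.f. $\binom{x+r-1}{r-1}p^rq^x$ derived in the next section.

```python
import random
from math import comb


def simulate_failures(r: int, p: float, rng: random.Random) -> int:
    """Hypothetical helper: run Bernoulli(p) trials until r successes; return the failures."""
    successes = failures = 0
    while successes < r:
        if rng.random() < p:  # success with probability p
            successes += 1
        else:                 # failure with probability q = 1 - p
            failures += 1
    return failures


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: P(X = x) = C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


rng = random.Random(2024)  # fixed seed for a reproducible run
r, p, n = 3, 0.4, 100_000  # illustrative parameters
samples = [simulate_failures(r, p, rng) for _ in range(n)]

for x in range(6):
    print(f"x={x}: simulated {samples.count(x) / n:.4f}, formula {nb_pmf(x, r, p):.4f}")
print("sample mean:", sum(samples) / n, " rq/p:", r * (1 - p) / p)
```

With this many runs the simulated frequencies should agree with the formula to roughly two or three decimal places.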

Negative Binomial Distribution

Consider a sequence of independent repetitions of a Bernoulli trial with constant probability $p$ of success and $q = 1-p$ of failure. Let the random variable $X$ denote the total number of failures before the $r^{th}$ success, where $r$ is a fixed positive integer.

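The event $\{X = x\}$ occurs exactly when the first $(x+r-1)$ trials contain $(r-1)$ successes and $x$ failures, and trial $(x+r)$ is a success. The number of ways of placing $(r-1)$ successes among the first $(x+r-1)$ trials is $\binom{x+r-1}{r-1}$.

Hence, by independence, the probability of $x$ failures before the $r^{th}$ success is given by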

$$ \begin{eqnarray*} P(X=x) &=& P[(r-1) \text{ successes in the first } (x+r-1) \text{ trials}]\\ & & \quad \times P[\text{success on trial } (x+r)] \\ &=& \binom{x+r-1}{r-1} p^{r-1} q^{x} \times p \\ &=& \binom{x+r-1}{r-1} p^{r} q^{x},\quad x=0,1,2,\ldots \end{eqnarray*} $$
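The counting argument can be checked by brute force. This is a minimal sketch with hypothetical helper names and illustrative values $r=3$, $p=0.4$. It enumerates every sequence of the first $x+r-1$ trials, keeps those with exactly $r-1$ successes, multiplies by the probability of a success on trial $x+r$, and compares the total with the closed form.

```python
from itertools import product
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper, closed form: C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


def nb_pmf_bruteforce(x: int, r: int, p: float) -> float:
    """Hypothetical helper: add the probability of every sequence in which trial x+r
    is the r-th success. Enumerates 2**(x+r-1) prefixes, so keep x + r small."""
    q = 1 - p
    total = 0.0
    for prefix in product((0, 1), repeat=x + r - 1):  # 1 = success, 0 = failure
        if sum(prefix) == r - 1:                     # r-1 successes and x failures first
            total += p ** (r - 1) * q**x * p          # ...then a success on trial x+r
    return total


r, p = 3, 0.4
for x in range(6):
    print(x, round(nb_pmf(x, r, p), 6), round(nb_pmf_bruteforce(x, r, p), 6))
print("total probability:", sum(nb_pmf(x, r, p) for x in range(200)))
```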

Definition of Negative Binomial Distribution

A discrete random variable $X$ is said to have a negative binomial distribution with parameters $r$ and $p$, written $X \sim NB(r, p)$, if its p.m.f. is given by

$$ \begin{eqnarray*} P(X=x) &=& \binom{x+r-1}{r-1} p^{r} q^{x},\quad x=0,1,2,\ldots;\ r=1,2,\ldots\\ & & \qquad\qquad \qquad 0<p, q<1,\ p+q=1. \end{eqnarray*} $$

Key Features of Negative Binomial Distribution

- A random experiment consists of repeated trials.

- Each trial has two possible outcomes: success and failure.

- The probability of success ($p$) remains constant for each trial.

- Trials are independent of each other.

- The random variable $X$ is the number of failures before the $r^{th}$ success.

Alternative Forms

Second Form

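Using the identity $\binom{x+r-1}{r-1} = \binom{x+r-1}{x} = (-1)^x\binom{-r}{x}$, where $\binom{-r}{x} = \dfrac{(-r)(-r-1)\cdots(-r-x+1)}{x!}$, an alternative form of the p.m.f. is obtained: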

$$ \begin{eqnarray*} P(X=x) & = & (-1)^x \binom{-r}{x} p^r q^x \\ & = & \binom{-r}{x} p^r (-q)^x, \quad x=0,1,2,\ldots \end{eqnarray*} $$

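The name "negative binomial" comes from this form: the probabilities are the successive terms of the binomial expansion of $p^r(1-q)^{-r}$, which has the negative index $-r$. Since $(1-q)^{-r} = p^{-r}$, the probabilities sum to 1.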
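The identity behind the second form can be checked in exact rational arithmetic. This is a minimal sketch. The helper `gen_binom` (the generalized binomial coefficient $a(a-1)\cdots(a-k+1)/k!$) and the values $r=4$, $p=3/10$ are illustrative assumptions. The code asserts that both forms of the p.m.f. agree term by term, then shows that the partial sums of $p^r(1-q)^{-r}$ approach 1.

```python
from fractions import Fraction
from math import comb, factorial


def gen_binom(a: int, k: int) -> Fraction:
    """Hypothetical helper: a(a-1)...(a-k+1) / k!, valid for negative a."""
    num = 1
    for i in range(k):
        num *= a - i
    return Fraction(num, factorial(k))


r, p = 4, Fraction(3, 10)  # exact rationals, so equalities hold with no rounding
q = 1 - p
for x in range(8):
    # C(x+r-1, r-1) = (-1)^x C(-r, x)
    assert comb(x + r - 1, r - 1) == (-1) ** x * gen_binom(-r, x)
    # hence the first and second forms of the p.m.f. coincide
    assert comb(x + r - 1, r - 1) * p**r * q**x == gen_binom(-r, x) * p**r * (-q) ** x

# Terms of p^r (1 - q)^(-r): the partial sums approach 1
partial = sum(gen_binom(-r, x) * p**r * (-q) ** x for x in range(60))
print(float(partial))
```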

Third Form

Let $p = \dfrac{1}{Q}$ and $q=\dfrac{P}{Q}$, so $Q-P=1$.

Then the p.m.f. can be written as

$$ P(X=x) = \binom{-r}{x} Q^{-r} (-P/Q)^x, \quad x=0,1,2,\ldots $$
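As a numerical sanity check of the third form, here is a minimal sketch with illustrative values $r=4$, $p=0.56$ and a hypothetical float version of the generalized binomial coefficient. It sets $Q = 1/p$, $P = q/p$, confirms $Q - P = 1$ up to rounding, and compares the third form with the original p.m.f.

```python
from math import comb


def gen_binom(a: float, k: int) -> float:
    """Hypothetical helper: a(a-1)...(a-k+1) / k! built as a running product."""
    value = 1.0
    for i in range(k):
        value *= (a - i) / (i + 1)
    return value


r, p = 4, 0.56
q = 1 - p
Q, P = 1 / p, q / p  # reparametrization used in the third form
third = [gen_binom(-r, x) * Q ** (-r) * (-P / Q) ** x for x in range(10)]
first = [comb(x + r - 1, r - 1) * p**r * q**x for x in range(10)]
print("Q - P =", Q - P)
print("largest difference:", max(abs(a - b) for a, b in zip(third, first)))
```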

Geometric as Particular Case

Setting $r=1$ in the negative binomial p.m.f. gives

$$ P(X=x) = q^x p, \quad x=0,1,2,\ldots $$

which is the p.m.f. of the geometric distribution (the number of failures before the first success). Hence, the geometric distribution is a particular case of the negative binomial distribution.
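A quick check of this special case, as a minimal sketch with hypothetical helper names and an illustrative $p=0.3$: with $r=1$ the coefficient $\binom{x}{0}$ equals 1, so the negative binomial p.m.f. reduces to $q^x p$.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


def geom_pmf(x: int, p: float) -> float:
    """Hypothetical helper: geometric p.m.f. for failures before the first success, q^x p."""
    return (1 - p) ** x * p


p = 0.3
for x in range(6):
    # with r = 1, C(x, 0) = 1 and the two expressions coincide
    print(x, nb_pmf(x, 1, p), geom_pmf(x, p))
```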

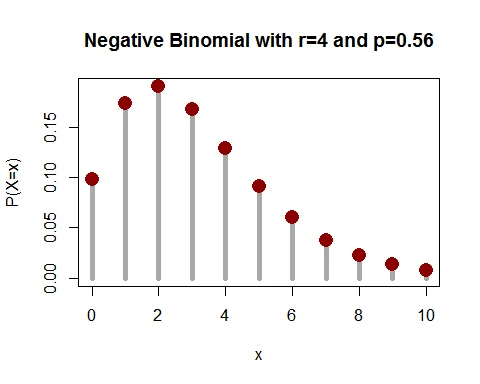

Graph of Negative Binomial Distribution

Following graph shows the probability mass function of negative binomial distribution with parameters $r=4$ and $p=0.56$.
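The plotted values can be reproduced in plain text. This is a minimal sketch that tabulates the p.m.f. for $r=4$, $p=0.56$ with a crude bar of `#` characters; the helper name is hypothetical. A plotting library such as matplotlib could draw the actual bar chart, but plain text keeps the example dependency-free.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


r, p = 4, 0.56
probs = [nb_pmf(x, r, p) for x in range(15)]
for x, prob in enumerate(probs):
    print(f"{x:2d} {prob:.4f} " + "#" * round(prob * 100))  # one '#' per 0.01
mode = max(range(len(probs)), key=probs.__getitem__)
print("most likely number of failures:", mode)
```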

Mean of Negative Binomial Distribution

The mean of the negative binomial distribution is $E(X)=\dfrac{rq}{p}$.

Proof

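Using the identity $x\binom{-r}{x} = (-r)\binom{-r-1}{x-1}$ and the expansion $\sum_{k=0}^\infty \binom{-n}{k}(-q)^k = (1-q)^{-n}$, the mean of the random variable $X$ is: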

$$ \begin{eqnarray*} \mu_1^\prime =E(X) &=& \sum_{x=0}^\infty x\cdot P(X=x) \\ &=& \sum_{x=0}^\infty x\cdot \binom{-r}{x} p^r (-q)^x\\ &=& p^r(-r)(-q)\sum_{x=1}^\infty \binom{-r-1}{x-1}\cdot (-q)^{x-1}\\ &=& p^r rq(1-q)^{-r-1}\\ &=& \frac{rq}{p}. \end{eqnarray*} $$
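The closed form can be compared with a direct, truncated evaluation of $\sum x\,P(X=x)$. This is a minimal sketch; the helper names, the cut-off of 1000 terms, and the parameter pairs are illustrative assumptions. For these parameters the neglected tail is far below floating-point precision.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


def truncated_mean(r: int, p: float, upper: int = 1000) -> float:
    """Hypothetical helper: E(X) = sum of x * P(X = x), cut off at x = upper - 1."""
    return sum(x * nb_pmf(x, r, p) for x in range(upper))


for r, p in [(4, 0.56), (2, 0.5), (10, 0.3)]:
    print(f"r={r}, p={p}: series {truncated_mean(r, p):.10f}, rq/p {r * (1 - p) / p:.10f}")
```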

Variance of Negative Binomial Distribution

The variance of the negative binomial distribution is $V(X)=\dfrac{rq}{p^2}$.

Proof

The variance of the random variable $X$ is:

$$ V(X) = E(X^2) - [E(X)]^2 $$

To find $E(X^2)$, write $X^2 = X(X-1) + X$ and use the identity $x(x-1)\binom{-r}{x} = (-r)(-r-1)\binom{-r-2}{x-2}$:

$$ \begin{eqnarray*} E(X^2) &=& E[X(X-1)+X] \\ &=& E[X(X-1)]+E(X)\\ &=& p^r (-r)(-r-1)(-q)^2 \sum_{x=2}^\infty \binom{-r-2}{x-2}(-q)^{x-2}+\frac{rq}{p}\\ & =& r(r+1)q^2p^r (1-q)^{-r-2}+\frac{rq}{p}\\ &=& \frac{r(r+1)q^2}{p^2}+\frac{rq}{p}. \end{eqnarray*} $$

Now,

$$ \begin{eqnarray*} V(X) &=& E(X^2)-[E(X)]^2 \\ &=& \frac{r(r+1)q^2}{p^2}+\frac{rq}{p}-\frac{r^2q^2}{p^2}\\ &=& \frac{rq^2}{p^2}+\frac{rq}{p}\\ &=& \frac{rq(q+p)}{p^2}\\ &=&\frac{rq}{p^2}. \end{eqnarray*} $$

Note: For the negative binomial distribution, $V(X) = E(X)/p > E(X)$ because $0<p<1$, so the variance always exceeds the mean.
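The variance formula and the note can be checked numerically. This is a minimal sketch with hypothetical helper names and illustrative parameter pairs. It computes $E(X)$ and $E(X^2)$ by truncated sums and prints $V(X)$ next to $rq/p^2$, together with the ratio $V(X)/E(X)$, which should equal $1/p > 1$.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


def mean_and_variance(r: int, p: float, upper: int = 1000) -> tuple[float, float]:
    """Hypothetical helper: truncated sums for E(X) and V(X) = E(X^2) - [E(X)]^2."""
    m1 = sum(x * nb_pmf(x, r, p) for x in range(upper))
    m2 = sum(x * x * nb_pmf(x, r, p) for x in range(upper))
    return m1, m2 - m1**2


for r, p in [(4, 0.56), (2, 0.5), (10, 0.3)]:
    mean, var = mean_and_variance(r, p)
    print(f"r={r}, p={p}: V(X)={var:.6f}, rq/p^2={r * (1 - p) / p**2:.6f}, "
          f"V(X)/E(X)={var / mean:.4f}")
```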

Moment Generating Function

The MGF of the negative binomial distribution is $M_X(t)=\big(Q-Pe^{t}\big)^{-r}$, valid for $Pe^t<Q$, i.e. $qe^t<1$.

Proof

The MGF is:

$$ \begin{eqnarray*} M_X(t) &=& E(e^{tX}) \\ &=& \sum_{x=0}^\infty e^{tx} \binom{-r}{x} Q^{-r} (-P/Q)^x \\ &=& Q^{-r} \bigg(1-\frac{Pe^{t}}{Q}\bigg)^{-r}\\ &=& \big(Q-Pe^{t}\big)^{-r}. \end{eqnarray*} $$

In terms of $p$ and $q$: $M_X(t) =p^r(1-qe^t)^{-r}$.
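Both closed forms of the MGF can be compared with the defining series $E(e^{tX})$. This is a minimal sketch with illustrative values $r=4$, $p=0.56$ and a hypothetical helper `mgf_series`. Only values of $t$ with $qe^t<1$ (here $t < -\log_e q \approx 0.82$) are used, because the series diverges otherwise. The factor $(qe^t)^x$ is formed directly so that $e^{tx}$ never overflows.

```python
from math import comb, exp, log


def mgf_series(t: float, r: int, p: float, terms: int = 2000) -> float:
    """Hypothetical helper: E(e^{tX}) summed term by term, using (q e^t)^x."""
    q = 1 - p
    return sum(comb(x + r - 1, r - 1) * p**r * (q * exp(t)) ** x for x in range(terms))


r, p = 4, 0.56
q = 1 - p
Q, P = 1 / p, q / p
print("series converges for t <", -log(q))  # need q e^t < 1
for t in (-1.0, 0.0, 0.3, 0.6):
    closed_QP = (Q - P * exp(t)) ** (-r)
    closed_pq = p**r * (1 - q * exp(t)) ** (-r)
    print(t, mgf_series(t, r, p), closed_QP, closed_pq)
```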

Mean and Variance Using MGF

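$$ \begin{eqnarray*} \mu_1^\prime &=& \bigg[\frac{d}{dt} M_X(t)\bigg]_{t=0}\\ &=& \Big[rPe^{t}\big(Q-Pe^{t}\big)^{-r-1}\Big]_{t=0}\\ &=& rP(Q-P)^{-r-1}\\ &=& rP \quad (\text{since } Q-P=1). \end{eqnarray*} $$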

In terms of $p$ and $q$: mean $= \dfrac{rq}{p}$.

$$ \begin{eqnarray*} \mu_2^\prime &=& \bigg[\frac{d^2}{dt^2} M_X(t)\bigg]_{t=0}\\ &=& \Big[rPe^{t}\big(Q-Pe^{t}\big)^{-r-1}+r(r+1)P^2e^{2t}\big(Q-Pe^{t}\big)^{-r-2}\Big]_{t=0}\\ &=& r(r+1)P^2 +rP. \end{eqnarray*} $$

Therefore, variance $= r(r+1)P^2 +rP-(rP)^2 = rP^2 + rP = rP(P+1) = rPQ$, since $Q = 1+P$.

In terms of $p$ and $q$: variance $= \dfrac{rq}{p^2}$.
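The derivatives of the MGF at $t=0$ can be approximated by central finite differences, which gives an independent check of $\mu_1' = rP$ and of the variance $rPQ$. This is a minimal sketch: the step size $h = 10^{-4}$, the helper name `mgf`, and the values $r=4$, $p=0.56$ are illustrative assumptions. Expect agreement to about six significant digits, not exactly.

```python
from math import exp


def mgf(t: float, r: int, p: float) -> float:
    """Hypothetical helper: M_X(t) = (Q - P e^t)^(-r) with Q = 1/p and P = q/p."""
    Q, P = 1 / p, (1 - p) / p
    return (Q - P * exp(t)) ** (-r)


r, p, h = 4, 0.56, 1e-4
mu1 = (mgf(h, r, p) - mgf(-h, r, p)) / (2 * h)                     # approximates M'(0)
mu2 = (mgf(h, r, p) - 2 * mgf(0.0, r, p) + mgf(-h, r, p)) / h**2  # approximates M''(0)
P, Q = (1 - p) / p, 1 / p
print("mu1' =", mu1, " rP =", r * P)
print("variance =", mu2 - mu1**2, " rPQ =", r * P * Q, " rq/p^2 =", r * (1 - p) / p**2)
```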

Cumulant Generating Function

The CGF of the negative binomial distribution is $K_X(t)=\log_e M_X(t) = -r\log_e(Q-Pe^t)$.
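Because $K_X(t) = \log_e M_X(t)$, its first two derivatives at $t=0$ (the first two cumulants) equal the mean and the variance directly, with no need to subtract $(\mu_1')^2$. This is a minimal finite-difference sketch with a hypothetical helper `cgf`, step $h=10^{-4}$, and illustrative values $r=4$, $p=0.56$.

```python
from math import exp, log


def cgf(t: float, r: int, p: float) -> float:
    """Hypothetical helper: K_X(t) = -r log(Q - P e^t) with Q = 1/p and P = q/p."""
    Q, P = 1 / p, (1 - p) / p
    return -r * log(Q - P * exp(t))


r, p, h = 4, 0.56, 1e-4
kappa1 = (cgf(h, r, p) - cgf(-h, r, p)) / (2 * h)                     # K'(0) = mean
kappa2 = (cgf(h, r, p) - 2 * cgf(0.0, r, p) + cgf(-h, r, p)) / h**2  # K''(0) = variance
q = 1 - p
print("K'(0)  =", kappa1, " rq/p   =", r * q / p)
print("K''(0) =", kappa2, " rq/p^2 =", r * q / p**2)
```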

Characteristic Function

The characteristic function of the negative binomial distribution is

$\phi_X(t) = p^r (1-qe^{it})^{-r}$.
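Unlike the MGF, the characteristic function exists for every real $t$, because $|qe^{it}| = q < 1$. This is a minimal sketch with a hypothetical helper `cf_series` and illustrative values $r=4$, $p=0.56$. It compares the series $E(e^{itX})$ with the closed form using Python complex numbers and prints $|\phi_X(t)|$, which never exceeds 1.

```python
import cmath
from math import comb


def cf_series(t: float, r: int, p: float, terms: int = 400) -> complex:
    """Hypothetical helper: E(e^{itX}) summed term by term; converges for all real t."""
    q = 1 - p
    z = q * cmath.exp(1j * t)
    return sum(comb(x + r - 1, r - 1) * p**r * z**x for x in range(terms))


r, p = 4, 0.56
q = 1 - p
for t in (0.0, 0.7, 2.0, -3.1):
    closed = p**r * (1 - q * cmath.exp(1j * t)) ** (-r)
    print(t, cf_series(t, r, p), closed, abs(closed))
```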

Recurrence Relation for Probabilities

The recurrence relation for probabilities is:

$$ \begin{equation*} P(X=x+1) = \frac{(x+r)}{(x+1)} q \cdot P(X=x),\quad x=0,1,\cdots \end{equation*} $$

where $P(X=0) = p^r$.
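The recurrence gives a way to tabulate the p.m.f. without computing binomial coefficients. This is a minimal sketch with a hypothetical helper `pmf_by_recurrence` and illustrative values $r=4$, $p=0.56$. It starts from $P(X=0)=p^r$, applies the recurrence repeatedly, and compares the result with the direct formula.

```python
from math import comb


def pmf_by_recurrence(r: int, p: float, n: int) -> list[float]:
    """Hypothetical helper: P(X=0), ..., P(X=n-1) built from the recurrence."""
    q = 1 - p
    probs = [p**r]  # P(X = 0) = p^r
    for x in range(n - 1):
        # P(X = x+1) = (x + r) / (x + 1) * q * P(X = x)
        probs.append((x + r) / (x + 1) * q * probs[-1])
    return probs


r, p = 4, 0.56
rec = pmf_by_recurrence(r, p, 20)
direct = [comb(x + r - 1, r - 1) * p**r * (1 - p) ** x for x in range(20)]
print("largest difference:", max(abs(a - b) for a, b in zip(rec, direct)))
```

Each step costs one multiplication, which is convenient when many consecutive probabilities are needed, for example to build a cumulative table.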

Poisson as Limiting Case

The negative binomial distribution $NB(r, p)$ tends to the Poisson distribution as $r\to \infty$ and $P = q/p\to 0$ with $rP = \lambda$ held fixed.

The limiting distribution is:

$$ \lim_{r\to \infty, P\to 0} P(X=x) = \frac{e^{-\lambda}\lambda^x}{x!} $$

which is the Poisson distribution with parameter $\lambda = rP$.
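The limit can be observed numerically. This is a minimal sketch with hypothetical helper names and an illustrative $\lambda = 2$. For each $r$ it chooses $p = r/(r+\lambda)$, so that $rP = rq/p = \lambda$ exactly, and prints the largest gap between the two p.m.f.s over $x = 0,\ldots,29$. The gap shrinks roughly in proportion to $1/r$.

```python
from math import comb, exp, factorial


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


def poisson_pmf(x: int, lam: float) -> float:
    """Hypothetical helper: Poisson p.m.f. e^{-lam} lam^x / x!."""
    return exp(-lam) * lam**x / factorial(x)


lam = 2.0
gaps = []
for r in (5, 50, 500, 5000):
    p = r / (r + lam)  # makes rP = r q / p equal to lam
    gap = max(abs(nb_pmf(x, r, p) - poisson_pmf(x, lam)) for x in range(30))
    gaps.append(gap)
    print(f"r={r:5d}  max |NB - Poisson| = {gap:.2e}")
```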

Negative Binomial Distribution Examples

Example 1: Defective Tires

A large lot of tires contains 5% defectives. Four tires are to be chosen for a car, and tires are inspected one at a time until 4 good ones are found.

a. Find the probability that you find 2 defective tires before 4 good ones.

b. Find the probability that you find at most 2 defective tires before 4 good ones.

c. Find the mean and variance of the number of defective tires before 4 good ones.

Solution

Let $X$ be the number of defective tires found before the 4th good tire. A good tire counts as a success, so $p=0.95$ and $q=0.05$.

$X \sim NB(4, 0.95)$.

The probability mass function is:

$$ \begin{aligned} P(X=x)&= \binom{x+3}{x} (0.95)^{4} (0.05)^{x},\quad x=0,1,2,\ldots \end{aligned} $$

a. Exactly 2 defective tires:

$$ \begin{aligned} P(X=2)&= \binom{5}{2} (0.95)^{4} (0.05)^{2}\\ &= 10\times 0.00204\\ &= 0.0204 \end{aligned} $$

b. At most 2 defective tires:

$$ \begin{aligned} P(X\leq 2)&=\sum_{x=0}^{2}P(X=x)\\ &= 1\times 0.8145+4\times 0.04073+10\times 0.00204\\ &= 0.8145+0.1629+0.0204\\ &=0.9978 \end{aligned} $$

c. Mean and Variance:

$$ \begin{aligned} E(X) &= \frac{4\times 0.05}{0.95} = 0.2105 \end{aligned} $$

$$ \begin{aligned} V(X) &= \frac{4\times 0.05}{0.95^2} = 0.2216 \end{aligned} $$
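The arithmetic in this example can be reproduced in a few lines. This is a minimal sketch using a hypothetical helper `nb_pmf`, with $r=4$ and $p=0.95$ taken from the solution above.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


r, p = 4, 0.95  # 4 good tires needed; a tire is good with probability 0.95
q = 1 - p
print("a. P(X = 2)  =", round(nb_pmf(2, r, p), 4))
print("b. P(X <= 2) =", round(sum(nb_pmf(x, r, p) for x in range(3)), 4))
print("c. E(X) =", round(r * q / p, 4), " V(X) =", round(r * q / p**2, 4))
```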

Example 2: Family with Female Children

The probability of male birth is 0.5. A couple wishes to have children until they have exactly two female children.

a. Write the probability distribution of $X$, the number of male children before two female children.

b. What is the probability that the family has four children?

c. What is the probability that the family has at most four children?

d. What is the expected number of male children?

e. What is the expected total number of children?

Solution

The birth of a female child is a success and the birth of a male child is a failure, so $p = 0.5$ and $r = 2$.

$X \sim NB(2, 0.5)$.

a. Probability distribution:

$$ \begin{aligned} P(X=x)&= \binom{x+1}{x} (0.5)^{2} (0.5)^{x},\quad x=0,1,2,\ldots \end{aligned} $$

b. The family has exactly four children when $X=2$, i.e. two males and two females with the fourth child female:

$$ \begin{aligned} P(X=2) & = \frac{(2+1)!}{1!2!}(0.5)^{2}(0.5)^{2} \\ & = 0.1875 \end{aligned} $$

c. At most four children (at most 2 males before 2 females):

$$ \begin{aligned} P(X\leq 2) & = 0.25+ 0.25+0.1875\\ &= 0.6875 \end{aligned} $$

d. Expected number of male children:

$$ \begin{aligned} E(X)& = \frac{2\times0.5}{0.5} = 2 \end{aligned} $$

e. Expected total number of children:

$$ \begin{aligned} E(X+2)& = 2+2 = 4 \end{aligned} $$
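The same computations in code, as a minimal sketch with a hypothetical helper `nb_pmf` and $r=2$, $p=0.5$ from the solution. Because every probability here is a power of $0.5$, the floating-point results are exact.

```python
from math import comb


def nb_pmf(x: int, r: int, p: float) -> float:
    """Hypothetical helper: negative binomial p.m.f. C(x+r-1, r-1) p^r q^x."""
    return comb(x + r - 1, r - 1) * p**r * (1 - p) ** x


r, p = 2, 0.5  # stop at the second female child; P(female) = 0.5
print("b. P(X = 2)  =", nb_pmf(2, r, p))
print("c. P(X <= 2) =", sum(nb_pmf(x, r, p) for x in range(3)))
mean_males = r * (1 - p) / p
print("d. E(X) =", mean_males, " e. E(X + 2) =", mean_males + r)
```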

Properties Summary Table

| Property | Formula |
|---|---|
| PMF | $P(X=x) = \binom{x+r-1}{r-1} p^r q^x$ |
| Mean | $E(X) = \frac{rq}{p}$ |
| Variance | $V(X) = \frac{rq}{p^2}$ |
| Standard Deviation | $\sigma = \sqrt{\frac{rq}{p^2}}$ |
| MGF | $M_X(t) = p^r(1-qe^t)^{-r}$ |
| Characteristic Function | $\phi_X(t) = p^r(1-qe^{it})^{-r}$ |

When to Use Negative Binomial Distribution

The negative binomial distribution is appropriate when:

- Counting the number of failures until a fixed number of successes

- Each trial has the same probability of success

- Trials are independent

- We’re interested in failures before achieving a target number of successes

Common Applications:

- Quality control: defective items before achieving required good items

- Clinical trials: number of treatment failures before achieving required successes

- Sales: number of rejections before achieving a target number of sales

- Manufacturing: production runs until achieving required quality standards

- Ecology: number of failures in observing rare species

- Reliability testing: number of failures before achieving target uptime

Key Relationships

- When $r=1$: Negative Binomial reduces to Geometric Distribution

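- As $r\to \infty$ with $p$ fixed: Negative Binomial, standardized as $(X - rq/p)/\sqrt{rq/p^2}$, approaches the standard Normal Distribution

- As $r\to \infty$ and $P = q/p \to 0$ with $rP = \lambda$ fixed: Negative Binomial approaches Poisson Distribution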

Conclusion

The negative binomial distribution is crucial for modeling repeated trials until a fixed number of successes. Its flexibility in handling various values of $r$ makes it suitable for diverse real-world applications, particularly in quality control, epidemiology, and reliability engineering.