Introduction to Uniform Distribution

The uniform distribution is one of the simplest probability distributions where all values within a specified range have equal probability of occurrence. It’s characterized by a constant probability density across its support, which is why it’s sometimes called the rectangular distribution.

The uniform distribution comes in two main forms:

- Discrete Uniform Distribution - for discrete random variables

- Continuous Uniform Distribution - for continuous random variables

This comprehensive guide covers both variants with their properties, formulas, and practical applications.

Part 1: Discrete Uniform Distribution

Definition of Discrete Uniform Distribution

A discrete random variable $X$ is said to have a uniform distribution if its probability mass function (pmf) is given by

$$ \begin{aligned} P(X=x)&=\frac{1}{N},\;\; x=1,2, \cdots, N. \end{aligned} $$

This is the simplest case where $X$ takes values from 1 to $N$.

General Form of Discrete Uniform Distribution

A general discrete uniform distribution has a probability mass function:

$$ \begin{aligned} P(X=x)&=\frac{1}{b-a+1},\;\; x=a,a+1,a+2, \cdots, b. \end{aligned} $$

where $a$ is the minimum value and $b$ is the maximum value.
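The general pmf above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `discrete_uniform_pmf` is an assumption made for this example, and exact fractions are used so that the probabilities sum to exactly 1.

```python
from fractions import Fraction

def discrete_uniform_pmf(x, a, b):
    """P(X = x) for X uniform on the integers a, a+1, ..., b."""
    if a > b:
        raise ValueError("a must not exceed b")
    # Only integer points inside [a, b] carry probability mass.
    if isinstance(x, int) and a <= x <= b:
        return Fraction(1, b - a + 1)
    return Fraction(0)

# The probabilities over the support add up to exactly 1.
total = sum(discrete_uniform_pmf(x, 1, 6) for x in range(1, 7))
```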

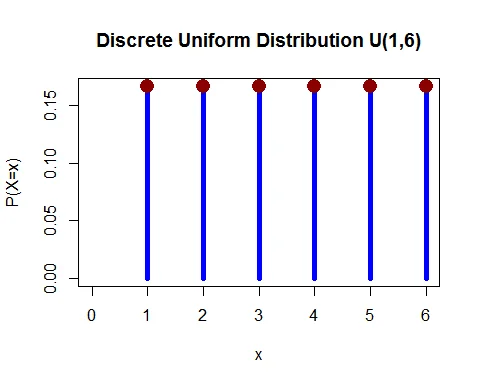

Graph of Discrete Uniform Distribution

Following graph shows the probability mass function of discrete uniform distribution $U(1,6)$ (rolling a fair die).

Mean of Discrete Uniform Distribution

For $X$ taking values $1, 2, \cdots, N$:

The expected value of a discrete uniform random variable is $E(X) =\dfrac{N+1}{2}$.

Proof

$$ \begin{aligned} E(X) &= \sum_{x=1}^N x\cdot P(X=x)\\ &= \frac{1}{N}\sum_{x=1}^N x\\ &= \frac{1}{N}(1+2+\cdots + N)\\ &= \frac{1}{N}\times \frac{N(N+1)}{2}\\ &= \frac{N+1}{2}. \end{aligned} $$

For general $X$ taking values $a, a+1, \cdots, b$:

The expected value is $E(X) =\dfrac{a+b}{2}$.
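One way to see the general result is to shift the variable back to the $1$ to $N$ case. The helper variable $Y$ below is introduced only for this sketch. Let $Y = X - a + 1$, so that $Y$ takes values $1, 2, \cdots, N$ with $N = b-a+1$. Then

$$ \begin{aligned} E(X) &= E(Y) + a - 1\\ &= \frac{N+1}{2} + a - 1\\ &= \frac{b-a+2}{2} + \frac{2a-2}{2}\\ &= \frac{a+b}{2}. \end{aligned} $$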

Variance of Discrete Uniform Distribution

For $X$ taking values $1, 2, \cdots, N$:

The variance of a discrete uniform random variable is $V(X) = \dfrac{N^2-1}{12}$.

Proof

$$ \begin{equation*} V(X) = E(X^2) - [E(X)]^2. \end{equation*} $$

Let us find the expected value of $X^2$:

$$ \begin{eqnarray*} E(X^2) &=& \sum_{x=1}^N x^2\cdot P(X=x)\\ &=& \frac{1}{N}\sum_{x=1}^N x^2\\ &=& \frac{1}{N}\times \frac{N(N+1)(2N+1)}{6}\\ &=& \frac{(N+1)(2N+1)}{6}. \end{eqnarray*} $$

Now, the variance is:

$$ \begin{eqnarray*} V(X) & = & E(X^2) - [E(X)]^2\\ &=& \frac{(N+1)(2N+1)}{6}- \bigg(\frac{N+1}{2}\bigg)^2\\ &=& \frac{N+1}{2}\bigg[\frac{2N+1}{3}-\frac{N+1}{2} \bigg]\\ &=& \frac{N^2-1}{12}. \end{eqnarray*} $$

The standard deviation is $\sigma =\sqrt{\dfrac{N^2-1}{12}}$.

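For general $X$ taking values $a, a+1, \cdots, b$:

Shifting a random variable by a constant does not change its variance, so the result above applies with $N = b-a+1$. The variance is $V(X) = \dfrac{(b-a+1)^2-1}{12}$.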
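The closed-form mean and variance can be checked against direct summation over the support. The sketch below does this with exact fractions. The function names and the test ranges are assumptions chosen for illustration.

```python
from fractions import Fraction

def moments_by_enumeration(a, b):
    """Return (mean, variance) of the discrete uniform on a..b by direct summation."""
    n = b - a + 1
    p = Fraction(1, n)
    mean = sum(x * p for x in range(a, b + 1))
    second_moment = sum(x * x * p for x in range(a, b + 1))
    return mean, second_moment - mean ** 2

def moments_closed_form(a, b):
    """Return (mean, variance) from the formulas (a+b)/2 and ((b-a+1)^2 - 1)/12."""
    n = b - a + 1
    return Fraction(a + b, 2), Fraction(n * n - 1, 12)

# The two approaches agree, including for a range that contains negative values.
for a, b in [(1, 6), (0, 9), (-3, 4)]:
    assert moments_by_enumeration(a, b) == moments_closed_form(a, b)
```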

Distribution Function

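The distribution function (CDF) of the general discrete uniform distribution, evaluated at a point of the support, is:

$F(x) = P(X\leq x)=\dfrac{x-a+1}{b-a+1}; \quad x=a,a+1,\cdots,b$.

For a non-integer $x$ between $a$ and $b$, replace $x$ by $\lfloor x \rfloor$. Also, $F(x)=0$ for $x<a$ and $F(x)=1$ for $x>b$.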
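Here is a minimal Python sketch of this CDF as a step function. It assumes the usual convention that a non-integer argument is rounded down to the nearest integer, and that the CDF is 0 below $a$ and 1 from $b$ onward. The function name is hypothetical.

```python
import math
from fractions import Fraction

def discrete_uniform_cdf(x, a, b):
    """P(X <= x) for X uniform on the integers a, a+1, ..., b."""
    if x < a:
        return Fraction(0)
    if x >= b:
        return Fraction(1)
    # Between support points the CDF stays flat, hence the floor.
    return Fraction(math.floor(x) - a + 1, b - a + 1)
```

For a fair die, `discrete_uniform_cdf(2, 1, 6)` and `discrete_uniform_cdf(2.5, 1, 6)` both give $1/3$, since no probability mass lies strictly between 2 and 3.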

Moment Generating Function

The MGF of the discrete uniform distribution on $1,2,\cdots,N$ is:

$M_X(t) = \dfrac{e^t (1 - e^{tN})}{N (1 - e^t)},\; t\neq 0$, with $M_X(0)=1$.

Proof

$$ \begin{eqnarray*} M_X(t) &=& E(e^{tX})\\ &=& \sum_{x=1}^N e^{tx} \dfrac{1}{N} \\ &=& \dfrac{1}{N} \sum_{x=1}^N (e^t)^x \\ &=& \dfrac{1}{N} e^t \dfrac{1-e^{tN}}{1-e^t} \\ &=& \dfrac{e^t (1 - e^{tN})}{N (1 - e^t)}. \end{eqnarray*} $$
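The geometric-series step can be sanity-checked numerically by comparing the closed form with the defining sum. The sketch below uses hypothetical function names and handles $t = 0$ separately, because the closed form is $0/0$ there while the definition gives $E(e^{0}) = 1$.

```python
import math

def mgf_direct(t, n):
    """E[e^{tX}] for X uniform on 1..n, computed from the definition."""
    return sum(math.exp(t * x) for x in range(1, n + 1)) / n

def mgf_closed_form(t, n):
    """Closed form e^t (1 - e^{tn}) / (n (1 - e^t)), with the t = 0 case handled."""
    if t == 0:
        return 1.0
    return math.exp(t) * (1 - math.exp(t * n)) / (n * (1 - math.exp(t)))

# The two expressions agree up to floating-point error.
for t in (-0.5, 0.3, 1.0):
    assert math.isclose(mgf_direct(t, 6), mgf_closed_form(t, 6), rel_tol=1e-9)
```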

Discrete Uniform Distribution Examples

Example 1: Fair Die Roll

Roll a six-faced fair die. Suppose $X$ denotes the number appearing on top.

a. Find the probability that an even number appears.

b. Find the probability that the number is less than 3.

c. Compute the mean and variance.

Solution

$X$ takes values $1,2,3,4,5,6$ and follows $U(1,6)$ distribution.

The probability mass function is:

$$ \begin{aligned} P(X=x)&=\frac{1}{6}, \; x=1,2,\cdots, 6. \end{aligned} $$

a. Probability of even number:

$$ \begin{aligned} P(X=\text{ even }) &=P(X=2)+P(X=4)+P(X=6)\\ &=\frac{1}{6}+\frac{1}{6}+\frac{1}{6}\\ &= 0.5 \end{aligned} $$

b. Probability less than 3:

$$ \begin{aligned} P(X < 3) &=P(X=1)+P(X=2)\\ &=\frac{1}{6}+\frac{1}{6}\\ &= 0.3333 \end{aligned} $$

c. Mean and Variance:

$$ E(X) = \frac{1+6}{2} = 3.5 $$

$$ V(X) = \frac{(6-1+1)^2-1}{12} = \frac{35}{12} = 2.9167 $$
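The same answers can be reproduced by enumerating the six faces. This is a small sketch using exact fractions, with variable names chosen only for illustration.

```python
from fractions import Fraction

faces = range(1, 7)
p = Fraction(1, 6)  # each face is equally likely

p_even = sum(p for x in faces if x % 2 == 0)
p_less_than_3 = sum(p for x in faces if x < 3)
mean = sum(x * p for x in faces)
variance = sum(x * x * p for x in faces) - mean ** 2

print(p_even, p_less_than_3, mean, variance)  # prints: 1/2 1/3 7/2 35/12
```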

Example 2: Last Digit of Phone Number

A telephone number is selected at random. $X$ is the last digit.

a. Find the probability that the last digit is 6.

b. Find the probability that the last digit is less than 3.

c. Find the probability that the last digit is greater than or equal to 8.

Solution

$X$ takes values $0,1,2,\cdots, 9$ and follows $U(0,9)$ distribution.

$$ P(X=x) = \frac{1}{10}; \quad x=0,1,2\cdots, 9 $$

a. $P(X=6) = \frac{1}{10} = 0.1$

b. $P(X<3) = P(X=0) + P(X=1) + P(X=2) = 0.3$

c. $P(X\geq 8) = P(X=8) + P(X=9) = 0.2$

Part 2: Continuous Uniform Distribution

Definition of Continuous Uniform Distribution

A continuous random variable $X$ is said to have a Uniform distribution (or rectangular distribution) with parameters $\alpha$ and $\beta$ if its probability density function (pdf) is given by

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} \frac{1}{\beta - \alpha}, & \hbox{$\alpha \leq x\leq \beta$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

Notation: $X\sim U(\alpha, \beta)$.

Verification of PDF

Clearly, $f(x)\geq 0$ for all $x$ (since $\beta > \alpha$), and

$$ \begin{eqnarray*} \int_\alpha^\beta f(x) \; dx &=& \int_\alpha^\beta \frac{1}{\beta-\alpha}\;dx\\ &=& \frac{1}{\beta - \alpha}\int_\alpha^\beta \;dx\\ &=& \frac{\beta-\alpha}{\beta - \alpha} = 1. \end{eqnarray*} $$

Hence $f(x)$ is a legitimate probability density function.

Standard Uniform Distribution

The case where $\alpha=0$ and $\beta=1$ is called the standard uniform distribution. The pdf is:

$$ \begin{equation*} f(x)=\left\{ \begin{array}{ll} 1, & \hbox{$0 \leq x\leq 1$;} \\ 0, & \hbox{Otherwise.} \end{array} \right. \end{equation*} $$

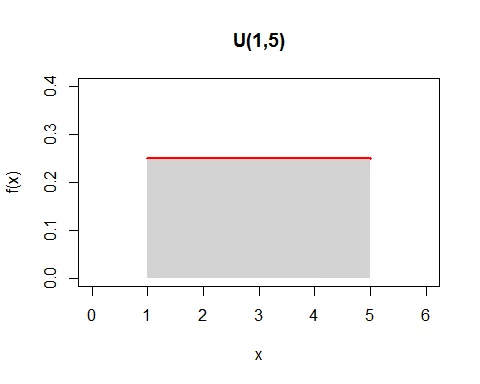

Graph of Continuous Uniform Distribution

Following is the graph of pdf of continuous uniform distribution with parameters $\alpha=1$ and $\beta=5$.

Note that the base of the rectangle is $\beta-\alpha$ and the height is $\dfrac{1}{\beta-\alpha}$, so the area is 1.

Distribution Function

The distribution function (CDF) of the uniform distribution $U(\alpha,\beta)$ is:

$$ \begin{equation*} F(x)=\left\{ \begin{array}{ll} 0, & \hbox{$x<\alpha$;}\\ \dfrac{x-\alpha}{\beta - \alpha}, & \hbox{$\alpha \leq x\leq \beta$;} \\ 1, & \hbox{$x>\beta$.} \end{array} \right. \end{equation*} $$

Proof

For $\alpha \leq x\leq \beta$,

$$ \begin{eqnarray*} F(x) &=& P(X\leq x) \\ &=& \int_\alpha^x f(t)\;dt\\ &=& \dfrac{1}{\beta - \alpha}\int_\alpha^x \;dt\\ &=& \dfrac{x-\alpha}{\beta - \alpha}. \end{eqnarray*} $$
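The piecewise pdf and CDF translate directly into code. The following is a minimal sketch with hypothetical function names, covering all three branches of the CDF.

```python
def uniform_pdf(x, alpha, beta):
    """Density of the continuous uniform distribution on [alpha, beta]."""
    if alpha >= beta:
        raise ValueError("alpha must be less than beta")
    return 1.0 / (beta - alpha) if alpha <= x <= beta else 0.0

def uniform_cdf(x, alpha, beta):
    """P(X <= x) for X uniform on [alpha, beta]."""
    if alpha >= beta:
        raise ValueError("alpha must be less than beta")
    if x < alpha:
        return 0.0
    if x > beta:
        return 1.0
    return (x - alpha) / (beta - alpha)
```

For $U(1,5)$, `uniform_pdf(3, 1, 5)` evaluates to 0.25 and `uniform_cdf(3, 1, 5)` to 0.5, matching the rectangle of base 4 and height 1/4.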

Mean of Continuous Uniform Distribution

The mean of the uniform distribution $U(\alpha,\beta)$ is $E(X) = \dfrac{\alpha+\beta}{2}$.

Proof

$$ \begin{eqnarray*} E(X) &=& \int_{\alpha}^\beta xf(x) \; dx\\ &=& \int_{\alpha}^\beta x\frac{1}{\beta-\alpha}\; dx\\ &=& \frac{1}{\beta-\alpha} \bigg[\frac{x^2}{2}\bigg]_\alpha^\beta\\ &=& \frac{1}{\beta-\alpha} \cdot\frac{\beta^2-\alpha^2}{2}\\ &=& \frac{1}{\beta-\alpha} \cdot\frac{(\beta-\alpha)(\beta+\alpha)}{2}\\ &=& \frac{\alpha+\beta}{2} \end{eqnarray*} $$

Variance of Continuous Uniform Distribution

The variance of the uniform distribution $U(\alpha,\beta)$ is $V(X) = \dfrac{(\beta - \alpha)^2}{12}$.

Proof

$$ \begin{equation*} V(X) = E(X^2) - [E(X)]^2. \end{equation*} $$

Let us find the expected value of $X^2$:

$$ \begin{eqnarray*} E(X^2) &=& \int_{\alpha}^\beta x^2\frac{1}{\beta-\alpha}\; dx\\ &=& \frac{1}{\beta-\alpha} \bigg[\frac{x^3}{3}\bigg]_\alpha^\beta\\ &=& \frac{1}{\beta-\alpha} \cdot\frac{(\beta-\alpha)(\beta^2+\alpha\beta +\alpha^2)}{3}\\ &=& \frac{\beta^2+\alpha\beta +\alpha^2}{3} \end{eqnarray*} $$

Thus, variance of $X$ is:

$$ \begin{eqnarray*} V(X) &=&E(X^2) - [E(X)]^2\\ &=&\frac{\beta^2+\alpha\beta +\alpha^2}{3}-\bigg(\frac{\alpha+\beta}{2}\bigg)^2\\ &=&\frac{(\beta-\alpha)^2}{12}. \end{eqnarray*} $$
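The last step of this derivation combines the two fractions over the common denominator 12. Written out in full:

$$ \begin{aligned} \frac{\beta^2+\alpha\beta +\alpha^2}{3}-\frac{\alpha^2+2\alpha\beta+\beta^2}{4} &= \frac{4(\beta^2+\alpha\beta +\alpha^2)-3(\alpha^2+2\alpha\beta+\beta^2)}{12}\\ &= \frac{\beta^2-2\alpha\beta+\alpha^2}{12}\\ &= \frac{(\beta-\alpha)^2}{12}. \end{aligned} $$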

Standard deviation: $\sigma =\sqrt{\dfrac{(\beta-\alpha)^2}{12}} = \dfrac{\beta-\alpha}{2\sqrt{3}}$.

Raw Moments

The $r^{th}$ raw moment of the uniform distribution $U(\alpha,\beta)$ is:

$$ \begin{equation*} \mu_r^\prime = \frac{\beta^{r+1}-\alpha^{r+1}}{(r+1)(\beta-\alpha)} \end{equation*} $$
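The raw-moment formula reproduces the mean and variance as special cases. The sketch below uses exact fractions and a hypothetical function name, with $U(1,12)$ as an arbitrary illustrative choice.

```python
from fractions import Fraction

def raw_moment(r, alpha, beta):
    """r-th raw moment E[X^r] of the continuous uniform distribution on [alpha, beta]."""
    alpha, beta = Fraction(alpha), Fraction(beta)
    return (beta ** (r + 1) - alpha ** (r + 1)) / ((r + 1) * (beta - alpha))

# r = 1 gives the mean; combining r = 1 and r = 2 gives the variance.
mean = raw_moment(1, 1, 12)                  # (alpha + beta) / 2
variance = raw_moment(2, 1, 12) - mean ** 2  # (beta - alpha)^2 / 12
```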

Mean Deviation

The mean deviation about the mean is:

$$ \begin{equation*} E[|X-\mu_1^\prime|] = \frac{\beta-\alpha}{4}. \end{equation*} $$
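A short derivation of this result, writing $\mu = \dfrac{\alpha+\beta}{2}$ and using the symmetry of the density about $\mu$:

$$ \begin{aligned} E[|X-\mu|] &= \frac{1}{\beta-\alpha}\int_\alpha^\beta |x-\mu|\; dx\\ &= \frac{2}{\beta-\alpha}\int_\mu^\beta (x-\mu)\; dx\\ &= \frac{2}{\beta-\alpha}\cdot\frac{(\beta-\mu)^2}{2}\\ &= \frac{1}{\beta-\alpha}\cdot\bigg(\frac{\beta-\alpha}{2}\bigg)^2\\ &= \frac{\beta-\alpha}{4}. \end{aligned} $$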

Moment Generating Function

The MGF of the uniform distribution $U(\alpha,\beta)$ is:

$$ \begin{equation*} M_X(t) = \frac{e^{t\beta}-e^{t\alpha}}{t(\beta-\alpha)},\; t\neq 0. \end{equation*} $$

Proof

$$ \begin{eqnarray*} M_X(t) &=& E(e^{tX}) \\ &=& \frac{1}{\beta-\alpha}\int_\alpha^\beta e^{tx} \; dx\\ &=& \frac{1}{\beta-\alpha}\bigg[\frac{e^{tx}}{t}\bigg]_\alpha^\beta\\ &=& \frac{e^{t\beta}-e^{t\alpha}}{t(\beta-\alpha)}. \end{eqnarray*} $$
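The formula excludes $t=0$, where the definition gives $M_X(0)=E(e^{0})=1$ directly. The sketch below handles that case explicitly and then approximates the derivative at 0 by a central difference, which should be close to the mean. The function name, the step size `h`, and the choice of $U(1,12)$ are assumptions for illustration.

```python
import math

def uniform_mgf(t, alpha, beta):
    """MGF of the continuous uniform distribution on [alpha, beta]."""
    if t == 0:
        return 1.0  # E[e^0] = 1; the closed form is 0/0 at t = 0
    return (math.exp(t * beta) - math.exp(t * alpha)) / (t * (beta - alpha))

# The first derivative of the MGF at 0 equals E(X); approximate it numerically.
h = 1e-4
approx_mean = (uniform_mgf(h, 1, 12) - uniform_mgf(-h, 1, 12)) / (2 * h)
```

Here `approx_mean` comes out close to $(1+12)/2 = 6.5$, up to the error of the finite-difference approximation.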

Characteristic Function

The characteristic function is:

$$ \begin{equation*} \phi_X(t) = \frac{e^{it\beta}-e^{it\alpha}}{it(\beta-\alpha)},\; t\neq 0. \end{equation*} $$

Continuous Uniform Distribution Examples

Example 1: Bus Stop Waiting Time

The waiting time at a bus stop is uniformly distributed between 1 and 12 minutes.

a. What is the probability density function?

b. What is the probability that the rider waits 8 minutes or less?

c. What is the expected waiting time?

d. What is the standard deviation?

Solution

Let $X$ denote waiting time. $X\sim U(1,12)$.

a. Probability density function:

$$ \begin{aligned} f(x) & = \frac{1}{12-1} = \frac{1}{11},\; 1\leq x \leq 12. \end{aligned} $$

b. Probability waits 8 minutes or less:

$$ \begin{aligned} P(X\leq 8) & = \int_1^8 f(x) \; dx = \frac{1}{11}\int_1^8 \; dx\\ & = \frac{1}{11}[8-1] = \frac{7}{11} = 0.6364. \end{aligned} $$

c. Expected waiting time:

$$ E(X) =\frac{1+12}{2} = 6.5 \text{ minutes} $$

d. Standard deviation:

$$ \sigma = \sqrt{\frac{(12-1)^2}{12}} = \sqrt{\frac{121}{12}} \approx \sqrt{10.0833} \approx 3.18 \text{ minutes} $$
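The four parts of this example follow from the formulas for $U(\alpha,\beta)$ with $\alpha=1$ and $\beta=12$. This is a minimal sketch with illustrative variable names.

```python
import math

alpha, beta = 1.0, 12.0  # waiting time bounds in minutes

density = 1 / (beta - alpha)                # a. height of the pdf on [1, 12]
p_wait_le_8 = (8 - alpha) / (beta - alpha)  # b. F(8)
expected_wait = (alpha + beta) / 2          # c. mean
std_dev = (beta - alpha) / math.sqrt(12)    # d. standard deviation

print(density, p_wait_le_8, expected_wait, std_dev)
```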

Example 2: Vehicle Weight

Assume the weight of a randomly chosen car is uniformly distributed between 2,500 and 4,500 pounds.

a. What are the mean and standard deviation?

b. What is the probability that a vehicle weighs less than 3,000 pounds?

c. What is the probability it weighs more than 3,900 pounds?

d. What is the probability it weighs between 3,000 and 3,800 pounds?

Solution

$X\sim U(2500, 4500)$.

The pdf is:

$$ f(x)=\frac{1}{2000},\quad 2500 \leq x\leq 4500 $$

The CDF is:

$$ F(x)=\frac{x-2500}{2000},\quad 2500 \leq x\leq 4500 $$

a. Mean and standard deviation:

$$ E(X) = \frac{2500+4500}{2} = 3500 \text{ pounds} $$

$$ \sigma = \sqrt{\frac{(4500-2500)^2}{12}} = 577.35 \text{ pounds} $$

b. Probability less than 3,000 pounds:

$$ P(X< 3000) = F(3000) = \frac{3000 - 2500}{2000} = 0.25 $$

c. Probability more than 3,900 pounds:

$$ P(X>3900) = 1-F(3900) = 1- \frac{1400}{2000} = 0.3 $$

d. Probability between 3,000 and 3,800 pounds:

$$ P(3000< X<3800) = F(3800) - F(3000) = 0.65 - 0.25 = 0.4 $$
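Each probability in this example is a difference of CDF values. The sketch below recomputes all four parts. The names `ALPHA`, `BETA`, and `cdf` are chosen for illustration.

```python
import math

ALPHA, BETA = 2500.0, 4500.0  # weight bounds in pounds

def cdf(x):
    """CDF of U(2500, 4500), clamped to [0, 1] outside the support."""
    return min(1.0, max(0.0, (x - ALPHA) / (BETA - ALPHA)))

mean = (ALPHA + BETA) / 2                # a. mean
std_dev = (BETA - ALPHA) / math.sqrt(12) # a. standard deviation
p_below_3000 = cdf(3000)                 # b. P(X < 3000)
p_above_3900 = 1 - cdf(3900)             # c. P(X > 3900)
p_between = cdf(3800) - cdf(3000)        # d. P(3000 < X < 3800)
```

Because the distribution is continuous, single points carry no probability, so strict and non-strict inequalities give the same values.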

Comparison: Discrete vs Continuous Uniform Distribution

| Property | Discrete $U(a,b)$ | Continuous $U(\alpha,\beta)$ |
|---|---|---|
| Variable Type | Discrete | Continuous |
| Range | $a, a+1, \ldots, b$ | $[\alpha, \beta]$ |
| PMF/PDF | $\frac{1}{b-a+1}$ | $\frac{1}{\beta-\alpha}$ |
| Mean | $\frac{a+b}{2}$ | $\frac{\alpha+\beta}{2}$ |
| Variance | $\frac{(b-a+1)^2-1}{12}$ | $\frac{(\beta-\alpha)^2}{12}$ |
| CDF (on the range) | $\frac{x-a+1}{b-a+1}$ | $\frac{x-\alpha}{\beta-\alpha}$ |

Properties Summary Table

| Property | Formula (Discrete) | Formula (Continuous) |
|---|---|---|
| Domain | $[a, b]$ integers | $[\alpha, \beta]$ reals |
| Mean | $\frac{a+b}{2}$ | $\frac{\alpha+\beta}{2}$ |
| Variance | $\frac{(b-a+1)^2-1}{12}$ | $\frac{(\beta-\alpha)^2}{12}$ |
| Std Dev | $\sqrt{\frac{(b-a+1)^2-1}{12}}$ | $\frac{\beta-\alpha}{2\sqrt{3}}$ |

When to Use Uniform Distribution

Discrete Uniform Distribution

- Rolling a fair die

- Random selection from a finite set

- Quality control with equally likely outcomes

- Lottery drawings

- Equal probability selection

Continuous Uniform Distribution

- Waiting times when equally likely

- Round-off errors

- Random number generation (basis for transforming to other distributions)

- Geometric problems with uniform spatial distribution

- Any situation with constant probability density over an interval
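The random number generation item can be illustrated with a short sketch. A standard uniform draw is rescaled to $U(\alpha,\beta)$, and the same draw can be pushed through an inverse CDF to produce another distribution. The exponential distribution is used here purely as an assumed example of a target. The function names, the seed, and the sample size are all illustrative choices.

```python
import math
import random

def sample_uniform(alpha, beta, rng):
    """Rescale a standard uniform draw to the interval [alpha, beta]."""
    return alpha + (beta - alpha) * rng.random()

def sample_exponential(rate, rng):
    """Inverse-transform sampling: apply the exponential inverse CDF to a U(0,1) draw."""
    u = rng.random()                   # u lies in [0, 1)
    return -math.log(1.0 - u) / rate   # 1 - u lies in (0, 1], so the log is defined

rng = random.Random(0)  # fixed seed so the sketch is reproducible
draws = [sample_uniform(1, 12, rng) for _ in range(100_000)]
sample_mean = sum(draws) / len(draws)  # should be near (1 + 12) / 2 = 6.5
```

With this many draws the sample mean should land close to the theoretical mean, although the exact value depends on the seed.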

Key Characteristics

Advantages

- Simple and mathematically tractable

- Useful baseline for comparisons

- Computationally efficient

- Clear interpretation

Applications

- Simulation and random sampling

- Queuing theory

- Quality control

- Reliability analysis

- Risk assessment

- Monte Carlo methods

Conclusion

The uniform distribution, both discrete and continuous, is fundamental in probability theory and statistics. Its simplicity makes it an excellent choice for:

- Modeling scenarios with equal likelihood across a range

- Generating random variates for simulation

- Approximating more complex distributions in certain contexts

- Serving as a baseline for statistical comparisons

Understanding both variants is essential for applied statistics, simulation, and probability-based decision-making in various fields including engineering, finance, and quality control.